Table of Contents

Introduction

Ever spend half your day in Excel, only to forget the formula you used yesterday?

Formulas are powerful and save hours of work, but remembering every syntax, especially for things like VLOOKUP or COUNTIF, can be frustrating.

Imagine typing what you want in plain English and watching Excel write the correct formula for you.

That is exactly what this project shows. You will build an AI-powered Excel Formula Generator using Python and Hugging Face’s T5 model. T5 already understands natural language, and with some fine-tuning, we will teach it to translate your plain-English requests into working Excel formulas.

In this guide, you will train it on ten core Excel functions such as SUM, VLOOKUP, and IF to prove the concept and explore how AI can automate one of the most repetitive parts of everyday work.

Building the Training Dataset

Every AI model needs data to learn from, and ours is no exception. We couldn’t just find a pre-made list of English commands and their matching Excel formulas, so we had to create our own. This is the most foundational part of the project: building a high-quality training dataset. The goal is simple: create thousands of pairs, each linking a natural language instruction (like “add up the numbers in column A”) to the correct formula (=SUM(A:A)).

Choosing Our Core Functions

To keep our project focused and manageable, the first thing we did was define its scope. We selected 10 of the most widely-used Excel functions that cover a range of operations, from simple math to conditional logic.

Our target functions for this project are:

SUMAVERAGECOUNTMAXMINIFCOUNTIFSUMIFVLOOKUPXLOOKUP

Using Python to Generate Data

Now for the creative part. To generate thousands of unique examples for our dataset, we wrote a small Python “generator” function for each of our 10 Excel functions. Think of a generator as a tiny script that produces a random instruction and its matching formula every time we run it.

Here is the generator for the SUM function, which shows exactly how this process works.

import random

def gen_SUM():

"""

Generate a natural language instruction and Excel formula for SUM.

Returns: (nl_string, formula_string, label)

"""

col = random.choice(list("ABCDEFGHIJKLMNOPQRSTUVWXYZ"))

nl_options = [

f"Sum all values in column {col}",

f"Add up numbers in column {col}",

f"Total of column {col}"

]

nl = random.choice(nl_options)

formula = f"=SUM({col}:{col})"

return nl, formula, "SUM"

The code is quite straightforward:

- First, it randomly picks a column letter from A to Z.

- Next, it randomly selects one of three different ways to phrase the instruction (e.g., ‘Total of column C’).

- Finally, it assembles the correct formula, like

=SUM(C:C).

By running this simple function thousands of times, we get a diverse set of examples for the model to learn from. We repeated this process for all 10 of our chosen functions.

Simplifying the Dataset: To help our model learn the core patterns effectively, we made a key simplification in our dataset. For this prototype, all our formulas apply to the entire column (e.g., C:C) instead of a specific range like C2:C20. This ensures the training data is clean and consistent.

Handling Complex Logic with the IF Function

The generator pattern works great for simple functions, but can it handle something with more moving parts? Absolutely. Let’s look at the generator for the IF function, which is more complex because it involves a logical condition.

An IF formula needs three key elements:

- A condition to test (e.g.,

C5 > 37). - A value to return if the condition is true (e.g.,

"Pass"). - A value to return if the condition is false (e.g.,

"Fail").

To make our dataset realistic, the generator needs to create variety in all three parts. Here is the code:

def gen_IF():

"""

Generate a natural language instruction and Excel formula for IF.

"""

col = random.choice(list("ABCDEFGHIJKLMNOPQRSTUVWXYZ"))

row = random.randint(1, 20)

num = random.randint(1, 100)

op = random.choice([">", "<", ">=", "<=", "="])

true_val = random.choice(["Yes", "Pass", "1", "TRUE"])

false_val = random.choice(["No", "Fail", "0", "FALSE"])

nl_options = [

f"If value in cell {col}{row} {op} {num}, return {true_val}, otherwise {false_val}",

f"Check if {col}{row} {op} {num}, then {true_val}, else {false_val}"

]

nl = random.choice(nl_options)

formula = f'=IF({col}{row}{op}{num},"{true_val}","{false_val}")'

return nl, formula, "IF"

To capture the different ways an IF statement can be used, this generator introduces randomness in several places:

- A random column letter (A-Z) and row number (1-20) to create a target cell like

C5orX18. - A random comparison operator (

>,<,=, etc.). - A random number (1-100) for the condition.

- Random outputs for the true/false results, like

"Pass"/"Fail"orTRUE/FALSE.

Each time it runs, it produces a complete pair, like this:

- Instruction:

"If value in cell C5 > 37, return TRUE, otherwise FALSE" - Formula:

=IF(C5>37,"TRUE","FALSE")

This design gives our dataset enough variation for the model to learn the structure of an IF formula effectively. Of course, we’ve still kept it simple for this prototype; for example, the conditions are limited to simple numeric comparisons, and we don’t combine multiple conditions with AND/OR.

Want to dive into the code yourself? The full project, including all generators and the complete training setup, is available on My GitHub.

Generating and Splitting the Dataset

Now that we have our 10 generator functions, it’s time to put them to work. In this step, we’ll run each generator repeatedly to build our complete dataset. We will then split it into three standard sets used in machine learning: training, validation, and testing.

Creating a Balanced Dataset

A key goal here is to create a balanced dataset. This means we want an equal number of examples for each of the 10 functions. If we had 5,000 SUM examples but only 100 VLOOKUP examples, the model would become an expert at SUM but would fail at VLOOKUP.

To ensure balance, we’ll generate exactly 1,000 examples per function. This gives us a total of 10,000 high-quality training pairs. We then shuffle the entire dataset to mix all the functions together randomly.

The Python code below handles this process: it loops through all our generators, calls each one 1,000 times, and collects the results into one big list.

from collections import Counter

import random, os, json

OUT_DIR = "excel_nl_dataset"

os.makedirs(OUT_DIR, exist_ok=True)

N_PER_FUNC = 1000 # number of examples per function

VAL_FRACTION = 0.15 # 15% for validation

TEST_FRACTION = 0.15 # 15% for testing

# Collect all generator functions into one list

GENERATORS = [

gen_SUM,

gen_AVERAGE,

gen_COUNT,

gen_MAX,

gen_MIN,

gen_IF,

gen_COUNTIF,

gen_SUMIF,

gen_VLOOKUP,

gen_XLOOKUP

]

def generate_examples():

data = []

label_count = Counter()

for gen in GENERATORS:

for _ in range(N_PER_FUNC):

nl, formula, label = gen()

data.append((nl, formula, label))

label_count[label] += 1

random.shuffle(data)

return data, label_count

data, label_count = generate_examples()

print("Total examples:", len(data))

print("Per function:", dict(label_count))

After running the code, the output confirms our dataset is perfectly balanced:

Total examples: 10000

Per function: {'SUM': 1000, 'AVERAGE': 1000, 'COUNT': 1000, 'MAX': 1000, 'MIN': 1000, 'IF': 1000, 'COUNTIF': 1000, 'SUMIF': 1000, 'VLOOKUP': 1000, 'XLOOKUP': 1000}

Splitting the Data and Saving Our Work

With our 10,000 examples ready, the final step is to divide them. We can’t use the same data to teach the model and to test it—that would be like giving a student the answers to a test before they take it!

So, we split our data into three standard sets:

- Training Set (70% or 7,000 examples): The main dataset the model learns from.

- Validation Set (15% or 1,500 examples): Used during training to check the model’s progress.

- Test Set (15% or 1,500 examples): Kept separate and only used at the very end to evaluate how well our final model performs on unseen data.

After splitting, we save each set as a simple .txt file (train.txt, val.txt, and test.txt). Each line in the file contains an instruction and its formula, separated by a tab. This clean format is easy to work with in the next steps of the project.

A Note on Dataset Size

In the code, we chose to generate 1,000 examples per function. Is this number set in stone? Not at all. You can easily increase it by changing the N_PER_FUNC variable to 5,000 or 10,000.

How does changing this number affect the project?

- More Data, Better Quality: Generally, more data leads to a better, more robust model. A larger dataset with more variations would help the model understand more diverse ways of phrasing a request and reduce the chance of it memorizing specific examples.

- Longer Training Time: The trade-off is time. A dataset with 50,000 examples will take roughly five times longer to train than our dataset of 10,000.

- Diminishing Returns: For a focused project like this one with simple patterns, 1,000-2,000 examples per function is often enough to get excellent results. Going from 10,000 to 100,000 examples might only improve accuracy by a tiny fraction while increasing training time tenfold.

For this prototype, 10,000 total examples provided a great balance between model quality and efficient training on a personal laptop.

Preprocessing the Dataset for the AI Model

Our dataset is currently a collection of .txt files, but an AI model like T5 doesn’t understand plain text. It only understands numbers. The process of converting our text into a format the model can work with is called preprocessing.

The main goal here is to turn our natural language instructions and Excel formulas into numerical sequences that T5 can process. This involves a crucial step called tokenization.

What is Tokenization?

Tokenization is the process of breaking text into smaller pieces, called “tokens,” and then mapping each token to a unique number (or ID). We use a pre-built tokenizer from Hugging Face that was specifically made for the T5 model. If terms like “tokenization” or “model” are new to you, don’t worry, you can check out my AI/ML Glossary for simple definitions.

For example, the instruction "Sum all values in column C" gets broken down into tokens like ["Sum", "all", "values", "in", "column", "C"] and then converted into a sequence of numbers like [12198, 66, 2620, 16, 6710, 205, 1].

Here’s the code that loads the tokenizer and processes a single example:

from transformers import T5Tokenizer

# Load tokenizer for T5-small

tokenizer = T5Tokenizer.from_pretrained("t5-small")

# Take one sample from the training set

sample_nl, sample_formula = "Sum all values in column C", "=SUM(C:C)"

# Tokenize the input (natural language instruction)

encoding = tokenizer(

sample_nl,

padding="max_length", # Make all sequences the same length

truncation=True, # Cut off if text is too long

max_length=32, # Max number of tokens for input

return_tensors="pt" # Return PyTorch tensors

)

# The target formula is also tokenized in the same way

The tokenizer produces three key outputs:

input_ids: The numerical representation of the text.attention_mask: A list of 1s and 0s that tells the model which tokens are real and which are just padding added to make the sequence a uniform length.labels: The tokenized version of the correct output (the Excel formula).

Building a PyTorch Dataset and DataLoader

Now that we know how to convert one example, we need an efficient way to do this for all 10,000 examples and feed them to the model in batches. For this, we use two standard PyTorch utilities: Dataset and DataLoader.

- A custom

Datasetclass wraps our collection of text pairs. Its job is to grab a single instruction-formula pair and return it as a properly tokenized set of tensors. - The

DataLoadertakes ourDatasetand handles the heavy lifting of shuffling the data and grouping it into mini-batches (we used a batch size of 16) for efficient training.

This combination is the standard pipeline for preparing data for model training in PyTorch. With our DataLoader ready, our data is now fully prepared to be fed directly into the T5 model.

Training the Model: Optimizer, Loss Function, and Results

With our data perfectly preprocessed and ready to go, we’ve arrived at the most exciting part: training the AI model. In this step, we’ll load the pre-trained T5 model from Hugging Face, set up our training components, and then run the training loop to teach our model how to become an Excel Formula Generator.

Loading the T5 Model

First, we need to load our base model. We’re using T5-small, a powerful yet compact transformer model. I chose the “small” version because it’s efficient enough to be trained on a personal laptop with a standard GPU, making this project accessible to almost anyone.

The model has two main parts: an encoder that reads and understands our instruction, and a decoder that generates the Excel formula.

from transformers import T5ForConditionalGeneration

import torch

# Load pretrained T5-small

model = T5ForConditionalGeneration.from_pretrained("t5-small")

# Move model to GPU if available

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = model.to(device)

print("Model loaded on:", device)

The Optimizer and Loss Function

To train a model, we need two more components: an optimizer and a loss function. If you’d like a refresher on these core concepts, you can check out my AI/ML Glossary for simple definitions.

- The Optimizer (

AdamW): Think of the optimizer as the engine that drives the learning process. It adjusts the model’s internal parameters in tiny steps to get it closer to the correct answer with each example it sees. We’re usingAdamW, a popular and effective optimizer for transformer models. - The Loss Function: This is the model’s report card. It measures how “wrong” the model’s prediction is compared to the true formula. The goal of training our Excel Formula Generator is to make this loss value as low as possible. Luckily, Hugging Face’s T5 model automatically calculates the loss for us when we provide it with the correct labels.

The Training Loop

The training loop is where the learning actually happens. We’ll run our model through the entire training dataset multiple times. Each full pass over the data is called an epoch.

In each epoch, we do two things:

- Training Phase: The model processes batches of data, makes predictions, checks its “report card” (the loss), and the optimizer updates its parameters to get better.

- Validation Phase: After learning, we evaluate the model on the validation set. This is a crucial check to ensure the model is genuinely learning patterns and not just memorizing the answers.

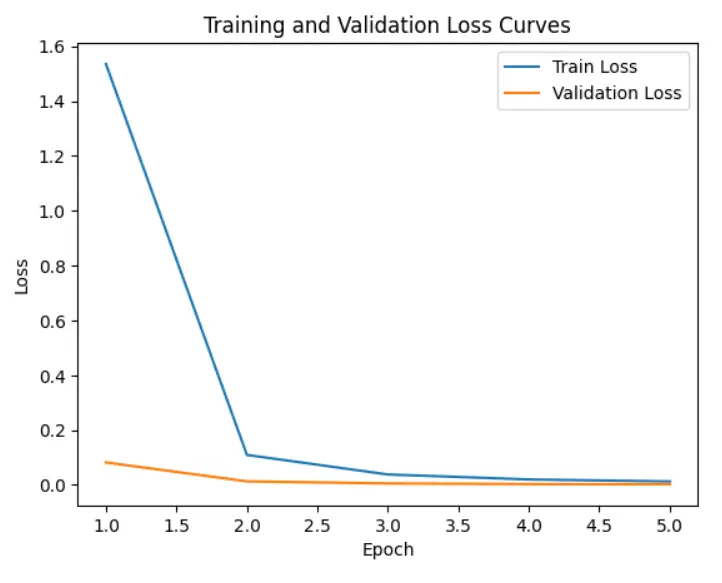

After running the training loop for 5 epochs, here are the results:

Epoch 1/5 | Train Loss: 1.5355 | Val Loss: 0.0820

Epoch 2/5 | Train Loss: 0.1094 | Val Loss: 0.0131

Epoch 3/5 | Train Loss: 0.0382 | Val Loss: 0.0054

Epoch 4/5 | Train Loss: 0.0200 | Val Loss: 0.0030

Epoch 5/5 | Train Loss: 0.0127 | Val Loss: 0.0026

As you can see, the Train Loss and Val Loss both decreased steadily and ended up very close to zero. This is a fantastic result! It tells us that the model learned the patterns in our data effectively.

Evaluating the Model on Test Data

Training is complete, and our loss is low, but how do we know if the model is actually any good? We need to test it. We’ll use the Test Set—that 15% of our data we held back and didn’t use for training or validation. This gives us an unbiased measure of the model’s real-world performance.

Checking Some Examples

First, let’s just look at a few random examples from the test set and compare the model’s predicted formula to the true formula. This gives us a qualitative feel for its accuracy.

model.eval()

test_samples = random.sample(test_pairs, 5)

for nl, true_formula in test_samples:

# Encode input

input_ids = tokenizer(nl, return_tensors="pt").input_ids.to(device)

# Generate prediction

pred_ids = model.generate(input_ids, max_length=32)

pred_formula = tokenizer.decode(pred_ids[0], skip_special_tokens=True)

print("Instruction:", nl)

print("True Formula:", true_formula)

print("Predicted Formula:", pred_formula)

print("-" * 50)

The output shows that for a variety of instructions, the predictions are spot-on:

Instruction: Compute mean for column M

True Formula: =AVERAGE(M:M)

Predicted Formula: =AVERAGE(M:M)

--------------------------------------------------

Instruction: Sum values in column R where column A is > 38

True Formula: =SUMIF(A:A,">38",R:R)

Predicted Formula: =SUMIF(A:A,">38",R:R)

--------------------------------------------------

Instruction: Maximum value in column E

True Formula: =MAX(E:E)

Predicted Formula: =MAX(E:E)

Measuring Exact Match Accuracy

Looking at a few examples is encouraging, but we need a more rigorous metric. For a task like this, the best measure is Exact Match Accuracy. It’s very simple: what percentage of the predicted formulas are exactly the same as the correct formulas?

After running our model over the entire test set of 1,500 examples, we got the following result:

Exact Match Accuracy on Test Set: 83.00%

This is an excellent result! An accuracy of 83% means that our Excel Formula Generator is highly reliable for the 10 functions it was trained on. The high score shows that the model didn’t just memorize the training data; it successfully learned the underlying patterns and can generalize to new, unseen instructions.

Testing with Custom Queries

Our model scored an 83% accuracy on the test set, which is fantastic. But how does it feel to use it in practice? In this final step, we’ll move beyond our pre-made dataset and try out a few custom instructions written from scratch.

This is the best way to see how the Excel Formula Generator handles phrasing it may not have seen before and to identify both its strengths and its limitations.

Here are a few custom queries we tested:

#Interactive Testing with Custom Queries

# Examples of custom instructions

custom_instructions = [

"Add up all values in column B",

"Find the maximum value in column K",

"If value in cell D10 <= 50, return Pass, otherwise Fail",

"Look up the value in A7 and return the matching value from column B",

]

# ... code to predict formula for each instruction ...

The Results

The model performed very well on most queries, but it also revealed a limitation:

- Instruction:“Add up all values in column B”

- Predicted:

=SUM(B:B)✅

- Predicted:

- Instruction:“Find the maximum value in column K”

- Predicted:

=MAX(K:K)✅

- Predicted:

- Instruction:“Look up the value in A7 and return the matching value from column B”

- Predicted:

=XLOOKUP(A7,A:A,B:B)✅

- Predicted:

- Instruction:“If value in cell D10 <= 50, return Pass, otherwise Fail”

- Predicted:

=IF(D10=50,"Pass","Fail")⚠️

- Predicted:

Interpretation

The model correctly handled SUM, MAX, and even the more complex XLOOKUP without any issues. However, it struggled with the IF statement containing a <= (less than or equal to) operator, simplifying it to just =.

This highlights an important takeaway: the model’s performance is directly tied to the diversity of its training data. While our dataset included different operators, it seems we need more examples for symbols like <= and >= to improve accuracy in those specific cases. This is a perfect example of a clear next step for improving the project in the future.

Conclusion and Next Steps

In this project, we successfully built a complete pipeline to generate Excel formulas from natural language using T5-small and PyTorch.

What We Achieved

- We designed and generated a balanced dataset of 10,000 examples covering 10 common Excel functions.

- We preprocessed the data and fine-tuned a T5-small transformer model to translate English instructions into Excel formulas.

- Our final model achieved an impressive 83% exact match accuracy on the unseen test set, showing that it learned the patterns effectively.

- We verified the results with both quantitative metrics and custom query testing, proving the model can generate correct formulas in most cases.

Key Insights and Future Improvements

This project demonstrates how accessible and powerful modern NLP tools have become for solving practical, real-world tasks. Even with a simple dataset and limited hardware, we were able to achieve excellent results.

The main takeaway is that model performance is directly tied to the diversity of the training data. While our Excel Formula Generator is highly effective, future improvements could include:

- Adding more training examples for operators like

<=and>=to improve accuracy on more complex conditions. - Expanding the dataset to include nested formulas (e.g.,

SUM(IF(...))) to make the system more powerful. - Deploying the model into an interactive app for real users.

If you’d like to explore the code in more detail, you can find the complete project, including all the data generators and the training script, on My GitHub. Thanks for following along!