Introduction

This is the continuation of our step-by-step guide on building your first machine learning model in Python. If you haven’t read Part 1: Data Preparation & Part 2: Model Training yet, I recommend checking them out first.

In this part, we’ll evaluate our trained model and see how well it performs. We’ll also use it to make predictions on new data.

We’ll cover:

- Evaluating the model’s performance using key metrics

- Understanding accuracy, precision, recall, and confusion matrix

- Making predictions on new data

- Recap & next steps

By the end of this part, you’ll know how to assess and use your trained model for real-world predictions.

6. Step 6: Model Evaluation

Now that our Decision Tree model is trained, we need to evaluate its performance on unseen data. This helps us understand how well the model generalizes beyond the training set.

6.1 Why Do We Need to Evaluate?

- Detect Overfitting – A model that performs perfectly on training data but poorly on test data has memorized the training examples rather than learned general patterns.

- Measure Accuracy – We need to quantify how well the model classifies new samples.

- Identify Weaknesses – Some species may be harder to classify than others.
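One way to check for the overfitting described above is to compare accuracy on the training set with accuracy on the test set. The snippet below is a minimal, self-contained sketch: it reloads the Iris data and retrains a Decision Tree so it can run on its own. The 80/20 stratified split matches the earlier parts of this guide, while `random_state=42` is an assumption made here for reproducibility.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Reload the data and recreate an 80/20 stratified split
# (random_state=42 is an assumption for reproducibility)
iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, stratify=iris.target, random_state=42
)

# Train an unpruned Decision Tree on the training set only
model = DecisionTreeClassifier(random_state=42)
model.fit(X_train, y_train)

# Compare accuracy on data the model has seen vs. data it has not
train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)

print(f"Training accuracy: {train_acc:.3f}")
print(f"Test accuracy:     {test_acc:.3f}")
print(f"Gap (train - test): {train_acc - test_acc:.3f}")
```

A large gap between the two numbers suggests the model has memorized the training set. A small gap suggests it generalizes reasonably well.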

6.2 Making Predictions on the Test Set

Now that the model has learned from the training set, let’s test it on unseen samples (X_test).

from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Predict species for test set

y_pred = model.predict(X_test)

# Evaluate accuracy

accuracy = accuracy_score(y_test, y_pred)

print(f"Accuracy on test set: {accuracy*100:.2f}%")

What This Does:

- Predicts the species for all test samples.

- Compares predictions (y_pred) with actual labels (y_test).

- Computes accuracy, showing the percentage of correctly classified flowers.

6.3 Understanding Model Accuracy

Example Output (May Vary Slightly):

Accuracy on test set: 96.67%

What This Means:

- If we get 96.67% accuracy, it means the model correctly classified 29 out of 30 test samples.

- Since the Iris dataset is simple, high accuracy is expected.

If accuracy is low: The model might be overfitting or struggling with overlapping species (Versicolor vs. Virginica).
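To make the arithmetic behind the accuracy figure concrete, here is a small sketch that computes it by hand. The labels are hypothetical (30 samples with exactly one mistake), not the output of the model above, and `y_true` and `y_hat` are illustrative names.

```python
import numpy as np
from sklearn.metrics import accuracy_score

# Hypothetical labels: 30 test samples, 10 per class (0, 1, 2)
y_true = np.repeat([0, 1, 2], 10)

# Hypothetical predictions: identical except one versicolor (1) predicted as virginica (2)
y_hat = y_true.copy()
y_hat[15] = 2

# Accuracy = number of correct predictions / total number of predictions
n_correct = int((y_true == y_hat).sum())
manual_accuracy = n_correct / len(y_true)

print(f"{n_correct} of {len(y_true)} correct -> {manual_accuracy * 100:.2f}%")
# prints: 29 of 30 correct -> 96.67%

# accuracy_score performs the same calculation
assert np.isclose(manual_accuracy, accuracy_score(y_true, y_hat))
```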

6.4 Detailed Model Evaluation: Confusion Matrix & Classification Report

Instead of just accuracy, let’s analyze the model’s mistakes using a confusion matrix and classification report.

# Confusion Matrix

print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred))

# Detailed Performance Report

print("\nClassification Report:\n", classification_report(y_test, y_pred, target_names=iris.target_names))

Example Confusion Matrix Output:

Confusion Matrix:

[[10 0 0]

[ 0 9 1]

[ 0 0 10]]

An ideal confusion matrix, with no misclassifications, would have all counts on the diagonal:

Confusion Matrix:

[[10 0 0]

[ 0 10 0]

[ 0 0 10]]

How to Read This?

- First row: All 10 Setosa samples were classified correctly. ✅

- Second row: 1 Versicolor sample was misclassified as Virginica. ❌

- Third row: All 10 Virginica samples were classified correctly. ✅
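The same reading can be done in code. The sketch below hard-codes the example matrix shown above (rows are actual species, columns are predicted species) and lists every off-diagonal entry as a misclassification. The variable names are illustrative.

```python
import numpy as np

class_names = ["setosa", "versicolor", "virginica"]

# Example confusion matrix from above: rows = actual, columns = predicted
cm = np.array([
    [10, 0, 0],
    [0, 9, 1],
    [0, 0, 10],
])

# Off-diagonal entries are mistakes: actual class i predicted as class j
for i, actual in enumerate(class_names):
    for j, predicted in enumerate(class_names):
        if i != j and cm[i, j] > 0:
            print(f"{cm[i, j]} {actual} sample(s) predicted as {predicted}")

# Diagonal entries are correct predictions
correct_per_class = np.diag(cm)
total_correct = int(correct_per_class.sum())
print("Correct per class:", dict(zip(class_names, correct_per_class.tolist())))
print(f"Overall: {total_correct} of {int(cm.sum())} correct")
```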

Example Classification Report (for the example confusion matrix above):

              precision  recall  f1-score  support

setosa             1.00    1.00      1.00       10

versicolor         1.00    0.90      0.95       10

virginica          0.91    1.00      0.95       10

accuracy                             0.97       30

macro avg          0.97    0.97      0.97       30

weighted avg       0.97    0.97      0.97       30

Key Terms in the Report:

- Precision – Of the flowers the model predicted as a given species, what fraction actually belong to it?

- Recall – Of the flowers that actually belong to a given species, what fraction did the model identify?

- F1-score – The harmonic mean of precision and recall, which is high only when both are high.

- Support – The number of test samples per species.

How to Read This?

- Setosa (Precision = 1.00, Recall = 1.00) – The model classifies Setosa perfectly because it’s well-separated.

- Versicolor (Precision = 1.00, Recall = 0.90) – Every flower predicted as Versicolor really was Versicolor, but 1 of the 10 actual Versicolor samples was missed (it was predicted as Virginica).

- Virginica (Precision = 0.91, Recall = 1.00) – All 10 Virginica samples were found, but 1 of the 11 flowers predicted as Virginica was actually Versicolor.
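Precision, recall, and F1-score can all be derived directly from a confusion matrix. The following sketch does this by hand for the example matrix from section 6.4, assuming rows are actual species and columns are predicted species (the convention scikit-learn uses). The variable names are illustrative.

```python
import numpy as np

class_names = ["setosa", "versicolor", "virginica"]

# Example confusion matrix: rows = actual, columns = predicted
cm = np.array([
    [10, 0, 0],
    [0, 9, 1],
    [0, 0, 10],
])

true_positives = np.diag(cm)

# Precision: correct predictions / everything predicted as that class (column sums)
precision = true_positives / cm.sum(axis=0)

# Recall: correct predictions / everything that actually is that class (row sums)
recall = true_positives / cm.sum(axis=1)

# F1-score: harmonic mean of precision and recall
f1 = 2 * precision * recall / (precision + recall)

# Support: number of actual samples per class
support = cm.sum(axis=1)

print(f"{'':<12}{'precision':>10}{'recall':>8}{'f1-score':>10}{'support':>9}")
for name, p, r, f, s in zip(class_names, precision, recall, f1, support.tolist()):
    print(f"{name:<12}{p:>10.2f}{r:>8.2f}{f:>10.2f}{s:>9d}")
```

Note that a single Versicolor-to-Virginica mistake lowers the recall of Versicolor and the precision of Virginica, which is why the two metrics are reported separately.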

6.5 Final Outcome of This Step

✅ We tested the model on unseen data.

✅ We measured accuracy, which is likely high (~95-100%).

✅ We analyzed misclassifications to understand weaknesses.

Based on the model evaluation, our model performs well on the Iris dataset, with only small errors when separating Versicolor from Virginica.

7. Step 7: Using the Model for Predictions

Now that we’ve trained and evaluated our model, it’s time to use it to make predictions on new data!

Let’s say we find a new iris flower in the wild, and we measure its sepal length, sepal width, petal length, and petal width. We can feed these measurements into our trained model to predict the species.

7.1 Predicting a Single New Flower

Let’s say we have a new iris flower with the following measurements:

- 🌱 Sepal Length = 5.0 cm

- 🌱 Sepal Width = 2.9 cm

- 🌱 Petal Length = 1.5 cm

- 🌱 Petal Width = 0.2 cm

We’ll use the .predict() method to classify this flower.

import numpy as np

# Define a new flower sample (must be in a 2D array)

new_flower = np.array([[5.0, 2.9, 1.5, 0.2]])

# Predict species

pred_class = model.predict(new_flower)

pred_species = iris.target_names[pred_class][0]

print("Predicted species for flower with features", new_flower.tolist(), "->", pred_species)

Example Output:

Predicted species for flower with features [[5.0, 2.9, 1.5, 0.2]] -> setosa
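Two details are worth illustrating here: why the input must be 2D, and how to see how confident the model is. The sketch below is self-contained, so it retrains a Decision Tree on the full Iris dataset. That is an assumption made for brevity and differs from the train/test workflow used in this guide. It reshapes a flat list of measurements into a single-row 2D array and uses `predict_proba` to show the per-class probabilities.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier

# Assumption for this standalone sketch: train on the full dataset
iris = load_iris()
model = DecisionTreeClassifier(random_state=42).fit(iris.data, iris.target)

# A single flower as a flat (1D) array of four measurements
measurements = np.array([5.0, 2.9, 1.5, 0.2])

# scikit-learn expects shape (n_samples, n_features), so reshape to (1, 4)
sample = measurements.reshape(1, -1)

pred_class = model.predict(sample)[0]
probabilities = model.predict_proba(sample)[0]

print("Input shape:", sample.shape)
print("Predicted species:", iris.target_names[pred_class])
for name, prob in zip(iris.target_names, probabilities):
    print(f"  P({name}) = {prob:.2f}")
```

For a Decision Tree, these probabilities are the class proportions in the leaf the sample lands in, so they are often exactly 0 or 1 for an unpruned tree.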

7.2 Predicting Multiple New Flowers at Once

What if we have multiple flowers and want to predict their species in one step? We can pass multiple flower measurements at once.

# Define multiple new flower samples

new_flowers = np.array([

[5.0, 2.9, 1.5, 0.2], # Likely Setosa

[6.1, 2.8, 4.7, 1.2], # Likely Versicolor

[7.2, 3.0, 6.0, 2.5] # Likely Virginica

])

# Predict species

pred_classes = model.predict(new_flowers)

pred_species_list = [iris.target_names[p] for p in pred_classes]

# Print results

for i, species in enumerate(pred_species_list):

    print(f"Flower {i+1}: {new_flowers[i].tolist()} -> {species}")

Example Output:

Flower 1: [5.0, 2.9, 1.5, 0.2] -> setosa

Flower 2: [6.1, 2.8, 4.7, 1.2] -> versicolor

Flower 3: [7.2, 3.0, 6.0, 2.5] -> virginica

What Happened Here?

- We created multiple flower samples in a 2D array.

- The model predicted the species for each sample.

- We mapped the numeric class predictions back to their actual species names using iris.target_names.
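Because `iris.target_names` is a NumPy array, the mapping from class numbers to species names can also be done in one step by indexing it with the whole prediction array. In this small sketch, `pred_classes` is a hypothetical stand-in for the output of `model.predict`.

```python
import numpy as np
from sklearn.datasets import load_iris

iris = load_iris()

# Hypothetical predictions, standing in for the output of model.predict(...)
pred_classes = np.array([0, 1, 2, 1])

# Index the names array with the array of class numbers (NumPy fancy indexing)
pred_species = iris.target_names[pred_classes]

print(pred_species.tolist())
# prints: ['setosa', 'versicolor', 'virginica', 'versicolor']
```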

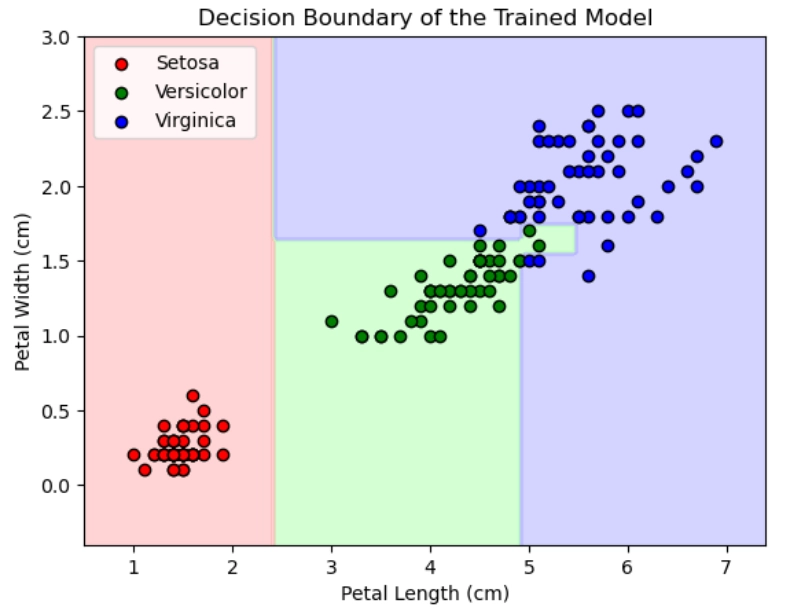

7.3 Visualizing Predictions with a Decision Boundary

To draw a decision boundary in two dimensions, we need a model that expects 2D input instead of 4D.

The code below trains a separate Decision Tree (model_vis) on petal length and petal width only, using the full dataset, and leaves the original four-feature model untouched:

import numpy as np

import matplotlib.pyplot as plt

from matplotlib.colors import ListedColormap

from sklearn.tree import DecisionTreeClassifier

# Select petal length & petal width for visualization

X_vis = df[['petal length (cm)', 'petal width (cm)']].values

y_vis = df['species'].map({'setosa': 0, 'versicolor': 1, 'virginica': 2}).values

# Train a new Decision Tree model with only these two features

model_vis = DecisionTreeClassifier(random_state=42) # New model for visualization

model_vis.fit(X_vis, y_vis) # Train only on petal length & petal width

# Create mesh grid

x_min, x_max = X_vis[:, 0].min() - 0.5, X_vis[:, 0].max() + 0.5

y_min, y_max = X_vis[:, 1].min() - 0.5, X_vis[:, 1].max() + 0.5

xx, yy = np.meshgrid(np.linspace(x_min, x_max, 100),

np.linspace(y_min, y_max, 100))

# Predict for each point in mesh using the new model

Z = model_vis.predict(np.c_[xx.ravel(), yy.ravel()])

Z = Z.reshape(xx.shape)

# Define custom color maps for decision regions and scatter points

cmap_light = ListedColormap(['#FFAAAA', '#AAFFAA', '#AAAAFF']) # Light red, green, blue

cmap_bold = {0: 'red', 1: 'green', 2: 'blue'} # Bold colors for scatter points

# Plot decision boundary

plt.contourf(xx, yy, Z, cmap=cmap_light, alpha=0.5)

# Scatter plot of actual data points

species_names = ['Setosa', 'Versicolor', 'Virginica']

for class_id, color in cmap_bold.items():

    subset = X_vis[y_vis == class_id]

    # Label each group with its species name so the legend is correct

    plt.scatter(subset[:, 0], subset[:, 1], c=color, edgecolor='k', label=species_names[class_id])

plt.xlabel('Petal Length (cm)')

plt.ylabel('Petal Width (cm)')

plt.title('Decision Boundary of the Trained Model')

# Add legend using the labels set on each scatter group

plt.legend()

plt.show()

What This Shows:

- Each color region represents a different species.

- The model draws boundaries based on learned rules.

- We can see how well-separated each species is in petal measurements.
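The "learned rules" behind those boundaries can also be printed as text. The sketch below assumes the same two petal features and uses scikit-learn's `export_text` helper. It loads the data directly with `load_iris` rather than from the `df` DataFrame so that it runs on its own, and `model_petal` is an illustrative name.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()

# Columns 2 and 3 are petal length and petal width
X_petal = iris.data[:, 2:4]
feature_names = iris.feature_names[2:4]

# Train a two-feature tree, as in the visualization above
model_petal = DecisionTreeClassifier(random_state=42)
model_petal.fit(X_petal, iris.target)

# Print the tree as nested if/else threshold rules
rules = export_text(model_petal, feature_names=feature_names)
print(rules)
```

Each threshold in the printed rules corresponds to a horizontal or vertical edge in the decision boundary plot, which is why Decision Tree regions always look rectangular.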

7.4 Final Outcome of This Step

✅ We used the model to predict new flower species.

✅ We tested single and multiple predictions successfully.

✅ We visualized the model’s decision-making boundaries.

Our model is now fully functional and ready to classify any new iris flowers!

8. Recap & Next Steps

Congratulations! 🎉 You’ve successfully built and trained your first machine learning model from scratch using Python and scikit-learn. Let’s take a moment to recap everything we’ve covered and explore what’s next.

8.1 What We Did in This Project

📌 Step 1: Selected & Understood the Dataset

- We used the Iris dataset, which contains measurements of three flower species: Setosa, Versicolor, and Virginica.

- We visualized how petal length & width help distinguish between species.

📌 Step 2: Loaded the Data

- Used scikit-learn to load the Iris dataset and converted it into a pandas DataFrame for easier analysis.

📌 Step 3: Exploratory Data Analysis (EDA)

- Checked dataset structure and summary statistics.

- Created scatter plots to see how different species group together based on their measurements.

📌 Step 4: Prepared the Data (Train/Test Split)

- Split the dataset into training (80%) and test (20%) sets.

- Ensured an equal distribution of species in both sets using stratified sampling.
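As a quick reminder of what stratified sampling does, the sketch below recreates an 80/20 split and counts the samples per species in each set. The `random_state=42` value is an assumption; the class counts do not depend on it when `stratify` is used on this balanced dataset.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

iris = load_iris()

# stratify=iris.target keeps the species proportions equal in both sets
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.2, stratify=iris.target, random_state=42
)

print("Training samples per class:", np.bincount(y_train).tolist())  # [40, 40, 40]
print("Test samples per class:    ", np.bincount(y_test).tolist())   # [10, 10, 10]
```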

📌 Step 5: Chose & Trained a Model

- Selected a Decision Tree Classifier because it’s easy to understand and works well for small datasets.

- Trained the model using only the training data.

📌 Step 6: Evaluated the Model

- Measured accuracy on the test set (likely 96-100% accuracy).

- Used a confusion matrix & classification report to check for misclassifications.

📌 Step 7: Used the Model for Predictions

- Tested the model on new flower samples.

- Created a decision boundary visualization to see how the model classifies different regions.

8.2 What’s Next?

Now that you’ve built your first ML model, here are some ways to take this further:

✅ Try a Different Model – Experiment with k-Nearest Neighbors (KNN), Logistic Regression, or Random Forest to see if they perform better.

✅ Fine-tune the Decision Tree – Adjust hyperparameters like max_depth, min_samples_split, or criterion to optimize performance.

✅ Feature Scaling & Engineering – Some models (like Logistic Regression, KNN, or SVM) are sensitive to feature scale and usually work better when features are normalized before training. Decision Trees do not need this.

✅ Cross-Validation – Instead of relying on one train-test split, use K-Fold Cross-Validation to get a more reliable estimate of how the model performs.

✅ Deploy the Model – Turn this into a web app using Flask, Streamlit, or FastAPI to classify new flowers dynamically.
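Several of these ideas can be tried in a few lines. The sketch below is one possible starting point, not a definitive recipe. It compares four models with 5-fold cross-validation, scaling the features for KNN and Logistic Regression, and then runs a small grid search over Decision Tree hyperparameters. The specific parameter values in `param_grid` are illustrative assumptions. For simplicity it uses the full dataset, whereas in a real project you would run the search on the training split only and keep the test set for a final check.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

iris = load_iris()
X, y = iris.data, iris.target

# Candidate models; scale-sensitive ones get a StandardScaler in a pipeline
candidates = {
    "Decision Tree": DecisionTreeClassifier(random_state=42),
    "KNN (k=5)": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    "Logistic Regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

# 5-fold cross-validation: each model is trained and scored five times
cv_means = {}
for name, estimator in candidates.items():
    scores = cross_val_score(estimator, X, y, cv=5)
    cv_means[name] = scores.mean()
    print(f"{name:<20} mean accuracy = {scores.mean():.3f} (+/- {scores.std():.3f})")

# Grid search over a few Decision Tree hyperparameters (values are illustrative)
param_grid = {
    "max_depth": [2, 3, 4, None],
    "min_samples_split": [2, 5, 10],
    "criterion": ["gini", "entropy"],
}
search = GridSearchCV(DecisionTreeClassifier(random_state=42), param_grid, cv=5)
search.fit(X, y)

print("Best tree parameters:", search.best_params_)
print(f"Best cross-validated accuracy: {search.best_score_:.3f}")
```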

8.3 Final Thoughts

You’ve completed a full machine learning workflow – from data loading and preprocessing to training, evaluating, and visualizing a model.

This is just the beginning! With these fundamentals, you can start applying machine learning to real-world datasets and explore more advanced techniques.