Introduction

If you’re exploring deep learning or starting with PyTorch, you’ve likely encountered the term “tensor.” Tensors are the fundamental data structure in frameworks like PyTorch (and TensorFlow), serving as building blocks for nearly all machine learning tasks.

But what exactly is a tensor?

In simple terms, a tensor is an n-dimensional array of numbers—a generalization of scalars (single numbers), vectors (lists), and matrices (tables of numbers) to any number of dimensions. Don’t worry if this sounds abstract! In this post, we’ll clearly explain tensors in a beginner-friendly manner, showing easy-to-understand analogies, simple PyTorch code examples, and practical explanations.

By the end of this article, you’ll confidently understand:

- What tensors are (using intuitive analogies)

- How to create and manipulate tensors in PyTorch

- Key tensor properties (shape, data type, and device)

- Basic tensor operations and their practical use

Ready? Let’s get started!

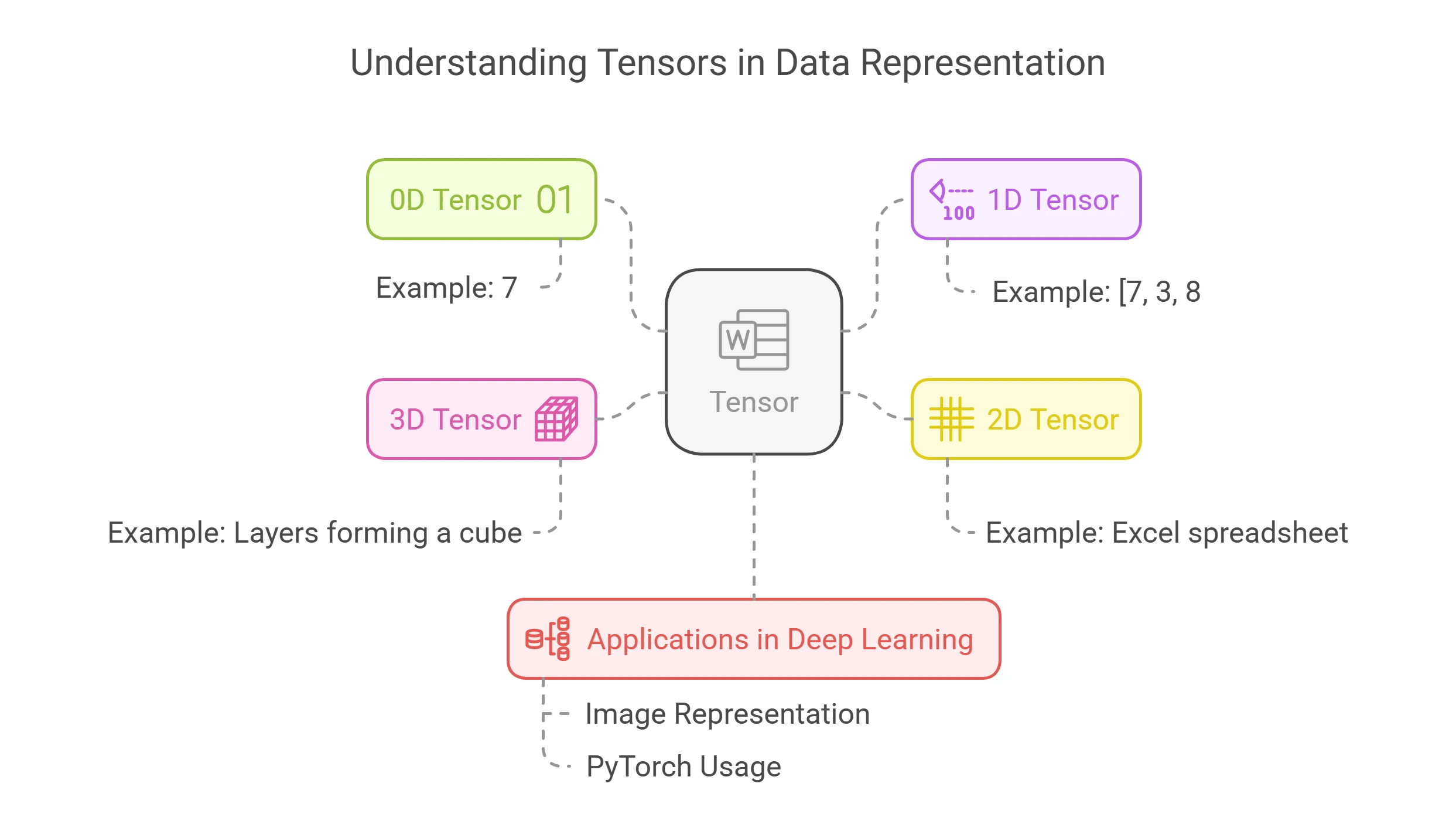

What Is a Tensor? (Think Multi-Dimensional Arrays)

A tensor is simply a way to store numerical data—it’s like an advanced multi-dimensional spreadsheet.

- 0D tensor (Scalar): A single number, like 7.

- 1D tensor (Vector): A list of numbers, like [7, 3, 8].

- 2D tensor (Matrix): A table or grid of numbers (like an Excel spreadsheet).

- 3D tensor: Imagine a stack of matrices (like layers forming a cube).

In deep learning, a single color image is typically represented as a 3D tensor. PyTorch conventionally orders the dimensions as color channels × height × width, while some other libraries use height × width × color channels. PyTorch uses tensors extensively because they handle mathematical computations efficiently and can run on GPUs (Graphics Processing Units) for accelerated performance.

You can think of tensors like containers or boxes that neatly store numbers. Imagine a single box holding one number (scalar), a row of boxes storing multiple numbers (vector), or even a grid-like box (matrix) holding numbers arranged in rows and columns. A tensor is simply a flexible generalization—allowing you to have boxes stacked and organized in as many dimensions as needed. PyTorch uses these “number containers” to perform calculations efficiently, especially when dealing with complex data like images or neural networks.
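To make the scalar, vector, matrix, and cube picture concrete, here is a minimal sketch that builds one tensor of each kind and prints how many dimensions it has. The variable names are just illustrative choices.

```python
import torch

scalar = torch.tensor(7)                 # 0D: a single number
vector = torch.tensor([7, 3, 8])         # 1D: a list of numbers
matrix = torch.tensor([[1, 2], [3, 4]])  # 2D: rows and columns
cube = torch.zeros((2, 3, 4))            # 3D: a stack of two 3x4 matrices

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # ndim is the number of dimensions; shape lists the size of each one
    print(f"{name}: ndim={t.ndim}, shape={tuple(t.shape)}")
```

The number of entries in the shape always equals ndim, which is an easy way to check what kind of tensor you are holding.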

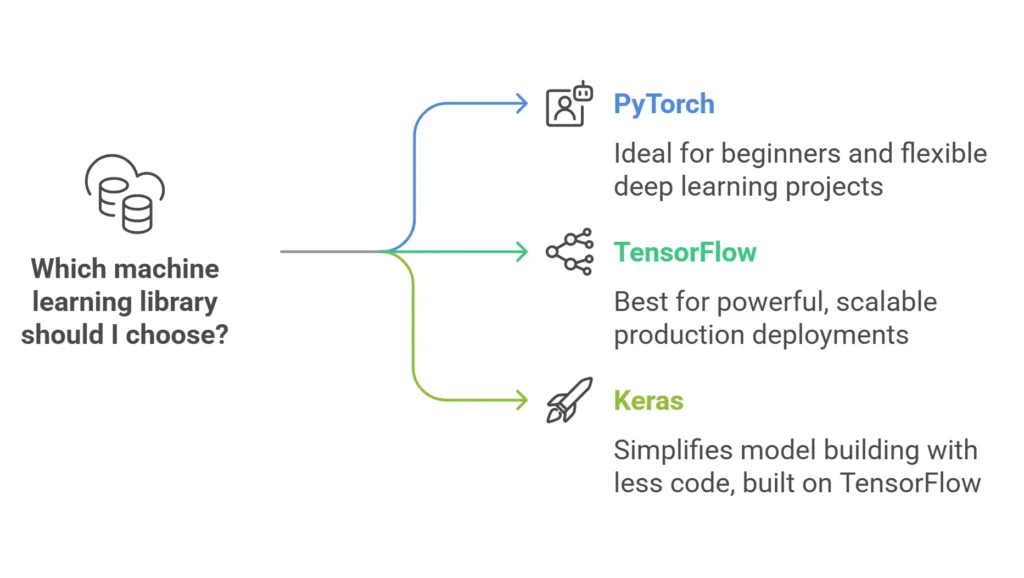

Introducing PyTorch (and Similar Libraries)

Before diving deeper into tensors, let’s briefly introduce PyTorch—a popular deep-learning library—and mention some similar libraries you might encounter on your learning journey:

PyTorch: Developed by Facebook (Meta), PyTorch is a widely-used library designed specifically for deep learning. It’s known for being beginner-friendly, intuitive, and flexible, making it a great choice to learn neural networks and deep learning.

TensorFlow (tensorflow.org): Another popular library from Google, TensorFlow is used heavily for both research and production deployment. It’s powerful and scalable, ideal for deploying machine learning models in real-world applications.

Keras (keras.io): A high-level, easy-to-use API built on top of TensorFlow, simplifying model building with less code.

All these libraries rely on the concept of tensors, which are the basic building blocks they use to handle data.

In this tutorial, we’ll use PyTorch because it’s beginner-friendly and clearly structured—perfect for understanding tensors and deep learning.

Now, let’s dive into tensors and see them in action with PyTorch!

Creating Tensors in PyTorch

Let’s dive right into creating tensors! First, import PyTorch in your Python environment:

import torch

Now, there are multiple ways to create a tensor: you can create one directly from data, or use built-in functions.

1. From Python Data:

You can create a tensor directly from Python lists or nested lists:

data = [[1, 2, 3], [4, 5, 6]]

x = torch.tensor(data)

print(x)

This creates a 2×3 tensor from a nested Python list (which we arranged like a 2-row matrix). If you print x, you’ll see something like:

tensor([[1, 2, 3],

        [4, 5, 6]])

PyTorch infers the tensor's shape (2 rows, 3 columns) and data type from the data. Because these values are Python integers, the result is an int64 tensor. Floating-point values would instead produce a 32-bit float (float32) tensor, which is PyTorch's default floating-point type unless you specify otherwise.
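A quick way to see how the data type is chosen is to build a few tensors and inspect dtype. This sketch assumes PyTorch's default floating-point type has not been changed from float32; the variable names are illustrative.

```python
import torch

ints = torch.tensor([1, 2, 3])          # Python ints -> torch.int64
floats = torch.tensor([1.0, 2.0, 3.0])  # Python floats -> torch.float32
mixed = torch.tensor([1, 2.5])          # mixing ints and floats gives a float tensor
forced = torch.tensor([1, 2, 3], dtype=torch.float32)  # explicit dtype wins

for name, t in [("ints", ints), ("floats", floats),
                ("mixed", mixed), ("forced", forced)]:
    print(name, t.dtype)
```

Passing dtype explicitly is worth the extra typing when a later operation expects floats, since many operations refuse to mix integer and float tensors silently.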

2. Built-in Initializers:

PyTorch provides functions to create tensors filled with certain values:

- torch.zeros(shape): create a tensor of the given shape filled with 0.

- torch.ones(shape): create a tensor filled with 1.

- torch.rand(shape): create a tensor with random values between 0 and 1 (uniform distribution).

a = torch.zeros((2, 3))

b = torch.ones((3, 3))

c = torch.rand((2, 2))

print("a:\n", a)

print("b:\n", b)

print("c:\n", c)

This might output:

a:

 tensor([[0., 0., 0.],

        [0., 0., 0.]])

b:

 tensor([[1., 1., 1.],

        [1., 1., 1.],

        [1., 1., 1.]])

c:

 tensor([[0.5319, 0.2023],

        [0.8765, 0.1980]])

Don’t worry about the exact random values in c. The point is you’ve quickly made some example tensors. Notice a and b contain floating-point numbers (even though they’re all 0s or 1s, they’re represented with a decimal point). PyTorch by default creates float tensors for zeros and ones if not told otherwise.
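If you want the random values to be repeatable, or want zeros that are integers rather than floats, the following sketch shows one way to do both. The seed value 0 is an arbitrary choice for illustration.

```python
import torch

# Seeding the random number generator makes torch.rand repeatable
torch.manual_seed(0)
first = torch.rand((2, 2))
torch.manual_seed(0)
second = torch.rand((2, 2))
print(torch.equal(first, second))  # True: same seed, same values

# The initializers accept a dtype argument to override the float default
int_zeros = torch.zeros((2, 3), dtype=torch.int64)
print(int_zeros.dtype)  # torch.int64
```

Fixing the seed is mainly useful for debugging and for tutorials, where you want every run to produce the same numbers.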

3. From NumPy:

If you already have a NumPy array and want to convert it to a tensor, you can use torch.from_numpy(numpy_array). Similarly, you can convert a CPU tensor to a NumPy array using tensor.numpy(). In both directions the result shares memory with the original, so changing one also changes the other. This interoperability can be handy, especially if you use NumPy for some data loading or prep and then want to feed data to PyTorch.

import numpy as np

import torch

# Create a NumPy array

numpy_array = np.array([[1, 2, 3], [4, 5, 6]])

# Convert it to a PyTorch tensor

tensor = torch.from_numpy(numpy_array)

print("NumPy Array:\n", numpy_array)

print("Converted PyTorch Tensor:\n", tensor)

Expected Output:

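NumPy Array:

 [[1 2 3]

 [4 5 6]]

Converted PyTorch Tensor:

 tensor([[1, 2, 3],

        [4, 5, 6]])

The tensor inherits the array's data type (int64 on most platforms). PyTorch leaves the dtype out of the printout when it is int64, so check tensor.dtype if you want to confirm it.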
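One detail worth seeing in action is that torch.from_numpy shares memory with the source array, while torch.tensor makes a copy. The sketch below uses illustrative variable names.

```python
import numpy as np
import torch

arr = np.array([1.0, 2.0, 3.0])
shared = torch.from_numpy(arr)  # shares memory with arr
copied = torch.tensor(arr)      # independent copy of the data

arr[0] = 99.0                   # modify the NumPy array in place

print(shared)  # first element is now 99
print(copied)  # still starts with 1

back = shared.numpy()           # a NumPy view of the same memory again
print(np.shares_memory(back, arr))  # True
```

If you need the tensor to be independent of the array, use torch.tensor(arr) or call .clone() on the result of from_numpy.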

Tensor Properties: Shape, Data Type, and Device

Every tensor has three key properties:

- Shape: Dimensions of the tensor (tensor.shape).

- Data Type (dtype): Type of data (integer, float32, etc.).

- Device: CPU or GPU location (tensor.device).

Check them easily like this:

import torch

# Creating different types of tensors

x = torch.tensor([[1, 2, 3], [4, 5, 6]]) # Default integer tensor

y = torch.tensor([1.0, 2.0, 3.0], dtype=torch.float64) # Float64 tensor

z = torch.ones((3, 3), device='cpu') # Tensor explicitly on CPU

# Move a tensor to GPU (if available)

if torch.cuda.is_available():

    z = z.to('cuda')

# Checking Tensor Properties

print("Tensor x:\n", x)

print("Shape of x:", x.shape)

print("Data type of x:", x.dtype)

print("Device of x:", x.device)

print("\nTensor y:\n", y)

print("Shape of y:", y.shape)

print("Data type of y:", y.dtype)

print("\nTensor z:\n", z)

print("Shape of z:", z.shape)

print("Device of z:", z.device) # Will print 'cuda:0' if moved to GPU, otherwise 'cpu'

This would output:

Tensor x:

 tensor([[1, 2, 3],

        [4, 5, 6]])

Shape of x: torch.Size([2, 3])

Data type of x: torch.int64

Device of x: cpu

Tensor y:

 tensor([1., 2., 3.], dtype=torch.float64)

Shape of y: torch.Size([3])

Data type of y: torch.float64

Tensor z:

 tensor([[1., 1., 1.],

        [1., 1., 1.],

        [1., 1., 1.]])

Shape of z: torch.Size([3, 3])

Device of z: cpu (or cuda:0 if a GPU is available)

Key Takeaways:

- tensor.shape tells you the dimensions (e.g., [2, 3] means 2 rows, 3 columns).

- tensor.dtype reveals the data type (e.g., float32, int64).

- tensor.device shows whether it's stored on CPU or GPU.

- You can move tensors to the GPU using .to('cuda'). Calling it without a GPU raises an error, which is why the example checks torch.cuda.is_available() first and otherwise leaves the tensor on the CPU.

| Property | Description | How to Check? |
|---|---|---|
| Shape | Dimensions of the tensor | tensor.shape or tensor.size() |
| Data Type (dtype) | Type of numbers stored | tensor.dtype |
| Device | Where the tensor is stored (CPU/GPU) | tensor.device |
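As a small follow-up to the table, this sketch checks that shape and size() report the same thing, shows two related helpers (ndim and numel), and converts a tensor to another data type with .to(). Variable names are illustrative.

```python
import torch

x = torch.tensor([[1, 2, 3], [4, 5, 6]])

print(x.shape == x.size())  # True: two spellings of the same information
print(x.ndim)               # 2 dimensions
print(x.numel())            # 6 elements in total

# .to() also converts data types; it returns a new tensor
x_float = x.to(torch.float32)
print(x.dtype, x_float.dtype)  # torch.int64 torch.float32
```

Note that .to() does not modify x in place, so you need to keep the returned tensor if you want the converted version.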

Basic Tensor Operations

Tensors support a wide variety of operations. You can think of these like vector/matrix operations you’d expect: addition, multiplication, indexing, reshaping, etc. Let’s go through a few common ones with examples:

- Indexing and Slicing: You can index into a tensor just like you would with a list or NumPy array.

  - For a 2D tensor x, x[0, 1] accesses the element in the 0th row and 1st column.

  - You can slice as well: x[0, :] gives you the first row (all columns of row 0), and x[:, 1] gives you the second column (all rows of column 1).

This is very useful for extracting parts of your data. For example:

x = torch.tensor([[10, 11, 12],

[13, 14, 15]])

print("Original tensor:\n", x)

print("Element at row 0, col 2:", x[0, 2].item()) # .item() to get Python number

print("First row:", x[0, :])

print("Second column:", x[:, 1])

Output:

Original tensor:

 tensor([[10, 11, 12],

        [13, 14, 15]])

Element at row 0, col 2: 12

First row: tensor([10, 11, 12])

Second column: tensor([11, 14])

.item() converts a one-element tensor to a plain Python number (here 12). Slicing returns sub-tensors.
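A few more indexing patterns come up often enough to be worth a quick sketch: negative indices, slicing a range of columns, and selecting elements by a condition. This reuses the same example tensor; the other names are illustrative.

```python
import torch

x = torch.tensor([[10, 11, 12],
                  [13, 14, 15]])

last_row = x[-1]        # negative indices count from the end
right_cols = x[:, 1:]   # all rows, columns 1 onward
big = x[x > 12]         # boolean mask: keep elements greater than 12

print(last_row)    # tensor([13, 14, 15])
print(right_cols)  # a 2x2 tensor holding [[11, 12], [14, 15]]
print(big)         # tensor([13, 14, 15])
```

Mask-based selection always returns a 1D tensor, because the selected elements need not form a rectangular block.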

- Reshaping: Sometimes you need to change the shape of a tensor without changing its data. This is done with tensor.view() or tensor.reshape() in PyTorch.

For example, if you have a tensor of shape [2, 3] and you want a [3, 2], you could do x_view = x.view(3, 2). The new tensor shares data with the original (view is like a different lens on the same data), so changing one changes the other. reshape also returns a view whenever the memory layout allows it and only copies the data when it has to, so it is not a reliable way to get a copy. If you want an independent copy with the new shape, clone first and then reshape: x.clone().view(3, 2).

Example:

y = torch.tensor([[1, 2, 3], [4, 5, 6]])

y2 = y.view(3, 2)

print("y shape:", y.shape, "\ny2 shape:", y2.shape)

print("y2:\n", y2)

This will print that y is torch.Size([2, 3]) and y2 is torch.Size([3, 2]), and y2’s content will look like a 3×2 matrix:

tensor([[1, 2],

[3, 4],

[5, 6]])

Essentially, we took the 2×3 matrix and laid it out as 3×2. Reshaping is useful when you need to feed data into certain functions or networks that expect a specific shape (for instance, flattening a 2D image into a 1D vector for a neural network, or adding an extra dimension for batch size).
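The sketch below illustrates the points made about reshaping: a view shares data with its source, -1 lets PyTorch work out a dimension for you when flattening, and unsqueeze adds an extra dimension such as a batch axis. Variable names are illustrative.

```python
import torch

y = torch.tensor([[1, 2, 3], [4, 5, 6]])

# A view shares data with the original tensor
y_view = y.view(3, 2)
y_view[0, 0] = 100
print(y[0, 0].item())    # 100: the original changed too

# -1 asks PyTorch to infer that dimension; this flattens to 1D
flat = y.reshape(-1)
print(flat.shape)        # torch.Size([6])

# unsqueeze(0) adds a leading dimension of size 1, e.g. a batch axis
batched = y.unsqueeze(0)
print(batched.shape)     # torch.Size([1, 2, 3])

# clone() first when you need an independent copy
independent = y.clone().view(3, 2)
independent[0, 0] = -1
print(y[0, 0].item())    # still 100
```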

- Arithmetic: You can perform elementwise arithmetic on tensors just by using operators or PyTorch functions. For instance, a + b adds two tensors of the same shape, a * b multiplies elementwise, etc. You can also do operations like matrix multiplication using torch.mm or the @ operator in Python.

Simple example:

a = torch.tensor([1.0, 2.0, 3.0])

b = torch.tensor([0.1, 0.2, 0.3])

print("a + b:", a + b) # elementwise addition

print("a * 2:", a * 2) # elementwise multiplication by scalar

print("a * b:", a * b) # elementwise multiplication (Hadamard product)

print("Dot product of a and b:", torch.dot(a, b))

Output:

a + b: tensor([1.1000, 2.2000, 3.3000])

a * 2: tensor([2., 4., 6.])

a * b: tensor([0.1000, 0.4000, 0.9000])

Dot product of a and b: tensor(1.4000)

The dot product (1.4) was computed as 1.0*0.1 + 2.0*0.2 + 3.0*0.3. If a and b were 2D (matrices), a * b would still do elementwise multiply; to do matrix multiplication you’d use a.matmul(b) or a @ b.
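To see the difference between elementwise and matrix multiplication on 2D tensors, here is a small sketch with two 2×2 matrices. The matrices A and B are made-up values for illustration.

```python
import torch

A = torch.tensor([[1.0, 2.0],
                  [3.0, 4.0]])
B = torch.tensor([[5.0, 6.0],
                  [7.0, 8.0]])

elementwise = A * B  # multiplies matching positions
matmul = A @ B       # rows of A times columns of B

print(elementwise)   # values [[5, 12], [21, 32]]
print(matmul)        # values [[19, 22], [43, 50]]

# torch.mm and .matmul give the same result as @ for 2D tensors
print(torch.equal(matmul, torch.mm(A, B)))  # True
```

For example, the top-left entry of the matrix product is 1×5 + 2×7 = 19, whereas the elementwise product there is simply 1×5 = 5.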

- Moving to GPU: In deep learning, computations can be very demanding. A GPU (Graphics Processing Unit) is designed to handle large amounts of calculations much faster than a CPU (Central Processing Unit).

Why Use a GPU? (Simple Analogy)

- Imagine you’re doing long division by hand (CPU method). It takes time because you solve one step at a time.

- Now, imagine 100 people solving 100 problems at the same time (GPU method). Since GPUs can process multiple tasks in parallel, they are much faster than CPUs for machine learning tasks.

In short: GPUs speed up deep learning by handling many calculations at once!

If you have a GPU available, you can move a tensor from the CPU to GPU like this:

import torch

# Create a tensor on CPU

a = torch.tensor([1.0, 2.0, 3.0])

# Move it to GPU

a_gpu = a.to('cuda')

print("Tensor on GPU:", a_gpu)

If you see this error:

AssertionError: Torch not compiled with CUDA enabled

Don’t worry! This just means your PyTorch installation is running in CPU-only mode (no GPU support).

For now: Your code will still work fine on CPU. Just remove .to('cuda'), and it will run without issues.

In future posts, we’ll cover how to install GPU-supported PyTorch and use CUDA for faster computations.
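One common way to avoid the error above without editing code between machines is to pick the device at runtime. This is a minimal sketch of that pattern; the variable names are illustrative, and it runs on CPU-only installations as well.

```python
import torch

# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

a = torch.tensor([1.0, 2.0, 3.0]).to(device)  # move an existing tensor
b = torch.ones(3, device=device)              # or create it on the device directly

result = a + b            # both operands must live on the same device
print(result.device)

# Bring the result back to the CPU, e.g. before converting to NumPy
back_on_cpu = result.cpu()
print(back_on_cpu)        # tensor([2., 3., 4.])
```

Operations between tensors on different devices raise an error, so it helps to create or move everything onto the same device up front.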

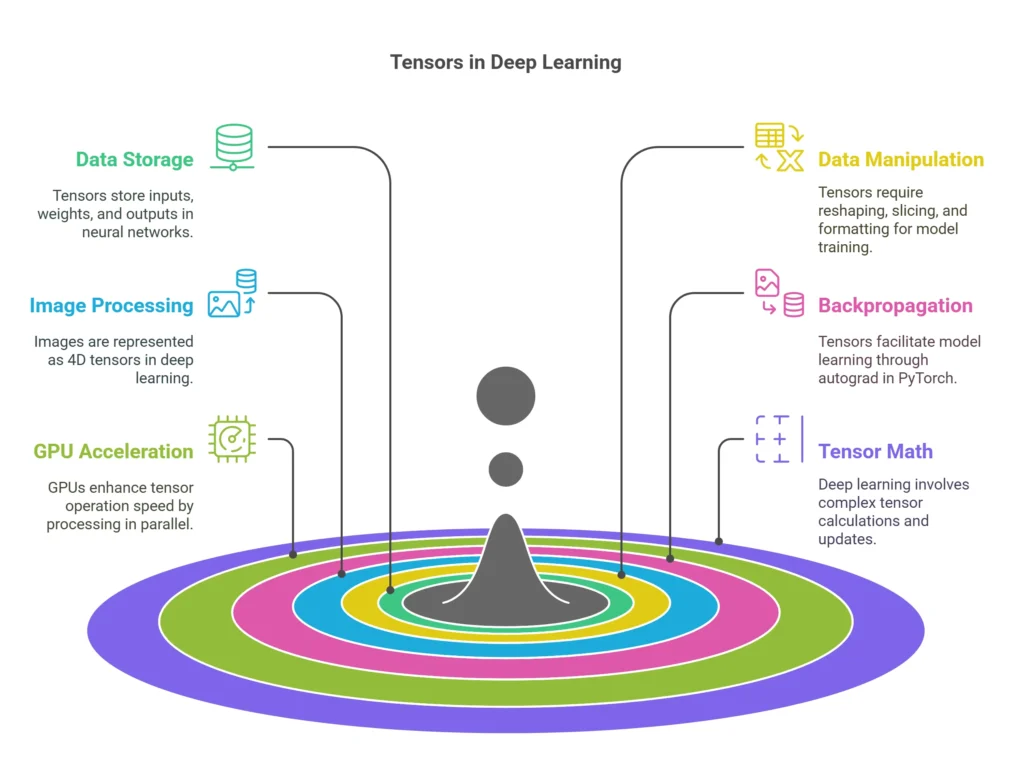

Why Tensors Matter in ML

Tensors are the main building blocks of deep learning. If you’re working with neural networks, you’re already working with tensors—even if you don’t realize it! Here’s why they matter:

Everything in a neural network is stored as tensors:

- Inputs → The data you feed into the model (e.g., images, text, numbers).

- Weights → The values the model adjusts to learn patterns.

- Outputs → The model’s predictions or final results.

You need to manipulate tensors to prepare data:

- Changing their shape (reshaping)

- Extracting parts of the data (slicing)

- Formatting them correctly for training

Batches of images in deep learning are stored as 4D tensors:

- Think of it as a stack of images with four dimensions: (batch size × color channels × height × width)

- Example: A batch of 10 color images, each 28×28 pixels → Tensor shape = [10, 3, 28, 28]
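The batch shape described above can be explored with random numbers standing in for real pixels. In this sketch the data is made up, and treating channel 0 as red assumes the usual RGB ordering.

```python
import torch

# Random values as a stand-in for a batch of 10 color images of 28x28 pixels
images = torch.rand((10, 3, 28, 28))

first_image = images[0]      # one image: [3, 28, 28]
red_channel = images[:, 0]   # channel 0 of every image: [10, 28, 28]

# Flatten each image into one long vector, keeping the batch dimension
flat = images.reshape(10, -1)

print(first_image.shape)  # torch.Size([3, 28, 28])
print(red_channel.shape)  # torch.Size([10, 28, 28])
print(flat.shape)         # torch.Size([10, 2352]) since 3 * 28 * 28 = 2352
```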

Tensors also help models “learn” using backpropagation:

- PyTorch automatically records the operations performed on tensors that have requires_grad=True, so it can compute gradients and adjust model weights (this mechanism is called autograd).
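Here is a minimal sketch of autograd on a tiny example: we compute the sum of squares of a tensor and ask PyTorch for the gradient. The function is chosen only because its derivative (2x) is easy to check by hand.

```python
import torch

# requires_grad=True tells PyTorch to record operations on this tensor
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# y = x1^2 + x2^2 + x3^2
y = (x ** 2).sum()

# backward() computes dy/dx and stores it in x.grad
y.backward()

print(y.item())  # 14.0
print(x.grad)    # tensor([2., 4., 6.]), i.e. 2 * x
```

During training the same mechanism runs on the model's weights, with the loss playing the role of y.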

GPUs make tensor operations much faster!

- Instead of doing calculations one-by-one (like a CPU), a GPU processes many tensors at the same time, speeding up training.

Deep learning is just a bunch of tensor math!

- The model is constantly performing matrix multiplications, additions, and updates on tensors to improve accuracy.

By understanding tensors, you’re understanding how deep learning models actually work!

A Quick Practical Example: Let’s say we want to do a very simple linear regression using PyTorch tensors (without invoking scikit-learn). We want to fit a line to a small set of points. We can use PyTorch to compute the solution analytically or by a simple gradient descent. Here’s a basic illustration using normal equations (analytic solution):

In this example, we’ll find the equation of a straight line that fits a set of points.

We assume the relationship follows this formula:

$$y = wx + b$$

- $x$ = input values (features)

- $y$ = target values (outputs)

- $w$ = weight (slope of the line)

- $b$ = bias (y-intercept)

Step 1: Create Data

We have three data points: (1, 2), (2, 4), and (3, 6).

Here:

x values → [1, 2, 3]

y values → [2, 4, 6]

import torch

# Create tensors for x (inputs) and y (targets)

X = torch.tensor([[1.0], [2.0], [3.0]]) # Shape: (3,1)

y = torch.tensor([[2.0], [4.0], [6.0]]) # Shape: (3,1)

Step 2: Add a Bias Term

- In linear regression, we also need a bias term ( b ).

- We do this by adding a column of ones to our x values.

# Add a column of ones for the bias term

ones = torch.ones((3, 1)) # Column of ones (shape: 3x1)

X_aug = torch.cat([X, ones], dim=1) # Concatenate x values with ones

Now, X_aug looks like this:

tensor([[1., 1.],

        [2., 1.],

        [3., 1.]])

- First column = original x values

- Second column = ones (bias term)

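Step 3: Solve for w and b Using the Normal Equation

The normal equation is a formula used in linear regression to directly compute the best values for w and b:

$$\begin{bmatrix} w \\ b \end{bmatrix} = (X^\top X)^{-1} X^\top y$$

Here X is the augmented input matrix (X_aug in the code) and y is the column of target values.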

Now that you’ve watched the video…

- You understand how the Normal Equation finds the best w and b.

- In the next section, we’ll apply this in PyTorch using tensors.

- If you need a quick refresher later, feel free to come back to this video anytime!

# Compute X^T * X (2x2 matrix)

XTX = X_aug.t().mm(X_aug)

# Compute X^T * y (2x1 matrix)

XTy = X_aug.t().mm(y)

# Solve for [w, b] using linear algebra

params = torch.linalg.solve(XTX, XTy)

# Extract w and b as numbers

w, b = params[0].item(), params[1].item()

Step 4: Print the Solution

print(f"Solved parameters: w = {w:.3f}, b = {b:.3f}")

Expected Output:

Solved parameters: w = 2.000, b = 0.000

What Just Happened?

- We solved for w and b using linear algebra.

- The equation of the best-fit line is: $y = 2x + 0$, or simply $y = 2x$.

In this snippet, we used tensor operations for matrix multiplication (.mm) and solving linear equations. We concatenated tensors to add a bias column. All those torch. operations treat data as tensors.
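The same line can also be found by simple gradient descent, the alternative mentioned at the start of this example. The sketch below is one possible version, not the only way to do it: the learning rate and number of steps are illustrative values that happen to work for this tiny dataset.

```python
import torch

# Same data as in the normal-equation example
X = torch.tensor([[1.0], [2.0], [3.0]])
y = torch.tensor([[2.0], [4.0], [6.0]])

# Start both parameters at zero and let autograd track them
w = torch.zeros(1, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

learning_rate = 0.05  # illustrative value, not tuned
for step in range(2000):
    predictions = X * w + b
    loss = ((predictions - y) ** 2).mean()  # mean squared error

    loss.backward()  # compute d(loss)/dw and d(loss)/db

    # Update the parameters without recording the update itself
    with torch.no_grad():
        w -= learning_rate * w.grad
        b -= learning_rate * b.grad

    # Reset gradients so they do not accumulate across steps
    w.grad.zero_()
    b.grad.zero_()

print(f"w = {w.item():.3f}, b = {b.item():.3f}")
```

The result should land very close to w = 2 and b = 0, matching the analytic solution. The normal equation is the more direct route for a problem this small, while gradient descent is the approach that carries over to neural networks.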

Wrapping Up

Tensors are the backbone of how data is represented in PyTorch. By getting comfortable with creating and manipulating tensors, you set yourself up to understand deeper topics like building neural network layers (which are essentially operations on tensors) and interpreting how data flows through those networks. We covered how to make tensors, check their shape and type, and do basic operations. As you progress, you’ll encounter more advanced tensor operations (like convolutions for image data, or slicing based on conditions, etc.), but they all build on this foundation.

Feel free to experiment in a Jupyter Notebook: create some tensors, perform arithmetic, and try moving data to GPU if you have one. The more you practice, the more second-nature it will become.