Introduction

Machine learning (ML) is one of the most exciting fields within artificial intelligence. It’s the technology behind things like personalized recommendations, voice recognition, and even self-driving cars. But what exactly is machine learning? In simple terms, machine learning is a way for computers to learn from data instead of being explicitly programmed with fixed rules. This beginner-friendly guide will explain what ML means, how it works at a high level, and give practical examples of machine learning in action. By the end, you’ll understand how machines can improve at tasks with experience, and why ML is such a powerful tool in modern technology and business.

What is Machine Learning?

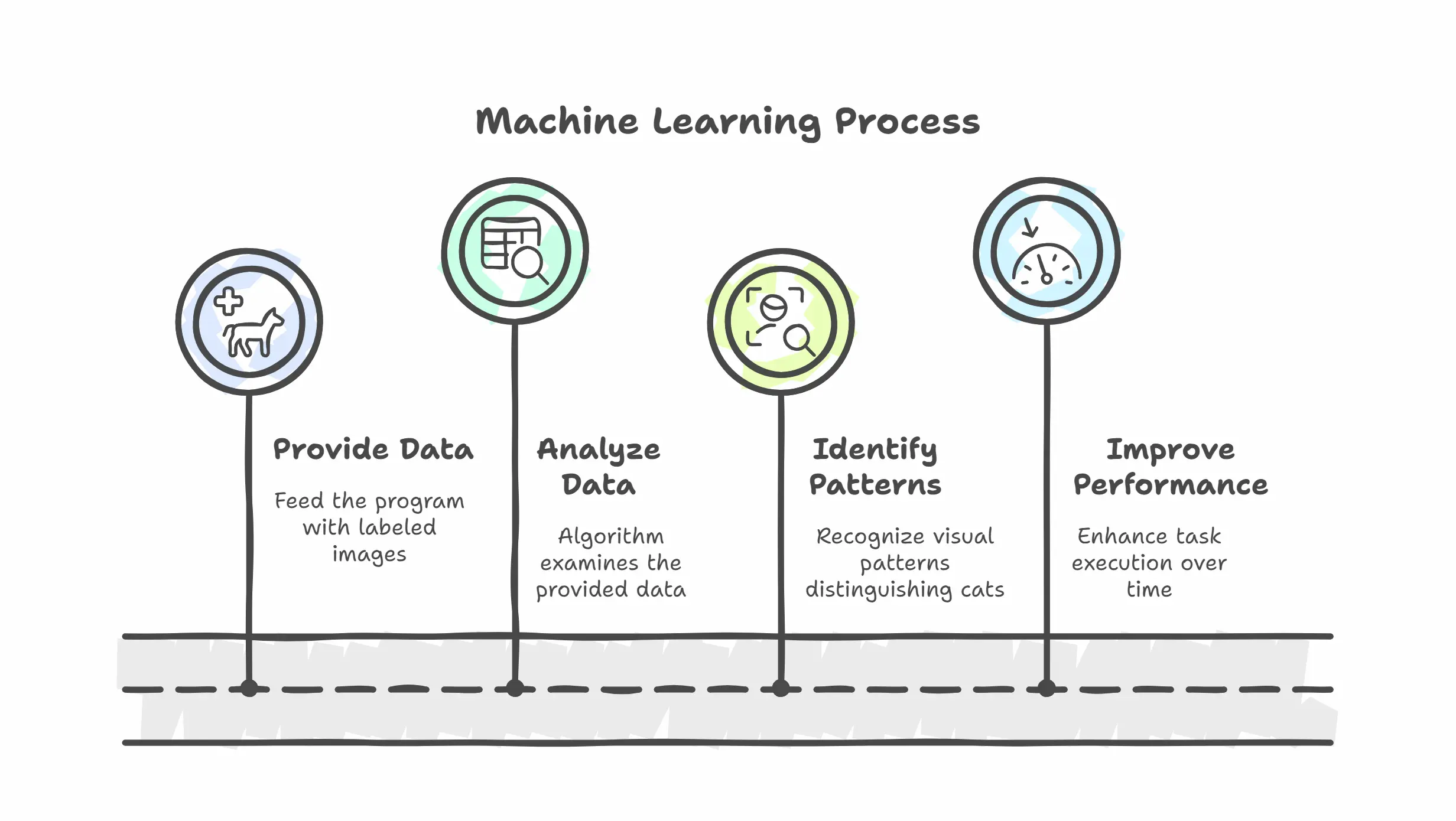

Machine learning is all about enabling computers to improve their performance on a task by learning from experience. Instead of a programmer hand-coding every decision or rule, the computer is given data and a general algorithm that it uses to figure out patterns on its own. In plain English, if you give a machine learning program a lot of examples of how to do something, it will get better at that task over time. For instance, imagine trying to teach a computer to recognize cats in photos: rather than coding rules for what a cat looks like, you would feed the program many labeled images of cats and dogs, and the ML algorithm will learn the visual patterns that distinguish cats.

How Does ML Work? (Basics)

The core idea of ML is training a model using data. You start with a set of training examples and feed them to a learning algorithm, which gradually adjusts a model to fit the data. Imagine you want to build an email spam filter. Instead of writing countless if-then rules to catch spam, you can use machine learning: gather a large collection of emails labeled as “spam” or “not spam.” This labeled dataset becomes your training data (the experience). You then feed these examples to a learning algorithm (the training process). Over time, the algorithm finds patterns – perhaps it learns that emails containing certain words or sent at 2 AM are more likely to be spam. The output of this training is a model that can then evaluate new emails and predict whether they are spam or not. In general, the more representative examples the model trains on, the better it can learn the precise differences, thereby improving its performance with experience.
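
To make the spam filter idea concrete, here is a minimal sketch in plain Python. It assumes a tiny, made-up set of labeled emails and uses a simple word-counting technique (naive Bayes) as the learning algorithm. The names `TRAINING_EMAILS`, `train`, and `predict` are illustrative only, and a real filter would use far more data and a tested library.

```python
import math
from collections import Counter

# Tiny made-up training set: (email text, label). Real filters use far more data.
TRAINING_EMAILS = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda for monday", "not spam"),
    ("lunch on monday", "not spam"),
    ("project report attached", "not spam"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def predict(model, text):
    """Return the label whose word statistics best match the text."""
    word_counts, label_counts = model
    vocabulary = set(word_counts["spam"]) | set(word_counts["not spam"])
    total_examples = sum(label_counts.values())
    scores = {}
    for label in word_counts:
        # Start from how common the label is overall (log prior).
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING_EMAILS)
print(predict(model, "free prize inside"))
print(predict(model, "monday project meeting"))
```

Nobody wrote a rule saying that “free” signals spam. The model picked that up from the word counts in the labeled examples.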

Most machine learning works in a loop of trial and error: the model makes a prediction on training data, checks how far off it is from the true answer, then adjusts its internal parameters to do better. This is repeated many times. Eventually, the model gets good at the task (for example, accurately flagging spam emails) by capturing the patterns in the training data. A key goal is that the model also works well on new data it hasn’t seen before (like a brand-new email in your inbox) – this ability to generalize to unseen examples is what makes machine learning so powerful compared to a fixed set of rules.
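
The predict, check, and adjust loop described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the data is made up to follow y = 2x + 1, the model is a straight line with two parameters, and the adjustment rule is basic gradient descent with an assumed learning rate of 0.05.

```python
# Made-up data following y = 2x + 1, split into training and unseen points.
train_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
unseen_data = [(4.0, 9.0), (5.0, 11.0)]

weight, bias = 0.0, 0.0   # the model's internal parameters, starting from a guess
learning_rate = 0.05      # how big each adjustment is (an assumed value)


def mean_squared_error(data, weight, bias):
    """Average squared gap between predictions and true answers."""
    return sum((weight * x + bias - y) ** 2 for x, y in data) / len(data)


for step in range(2000):
    grad_w = grad_b = 0.0
    for x, y in train_data:
        error = (weight * x + bias) - y      # prediction minus true answer
        grad_w += 2 * error * x / len(train_data)
        grad_b += 2 * error / len(train_data)
    # Adjust the parameters in the direction that reduces the error.
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

print(f"learned: y = {weight:.2f}x + {bias:.2f}")
print(f"error on unseen data: {mean_squared_error(unseen_data, weight, bias):.6f}")
```

The last line checks the model on points it never trained on, which is a small-scale version of testing whether it generalizes.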

Types of Machine Learning

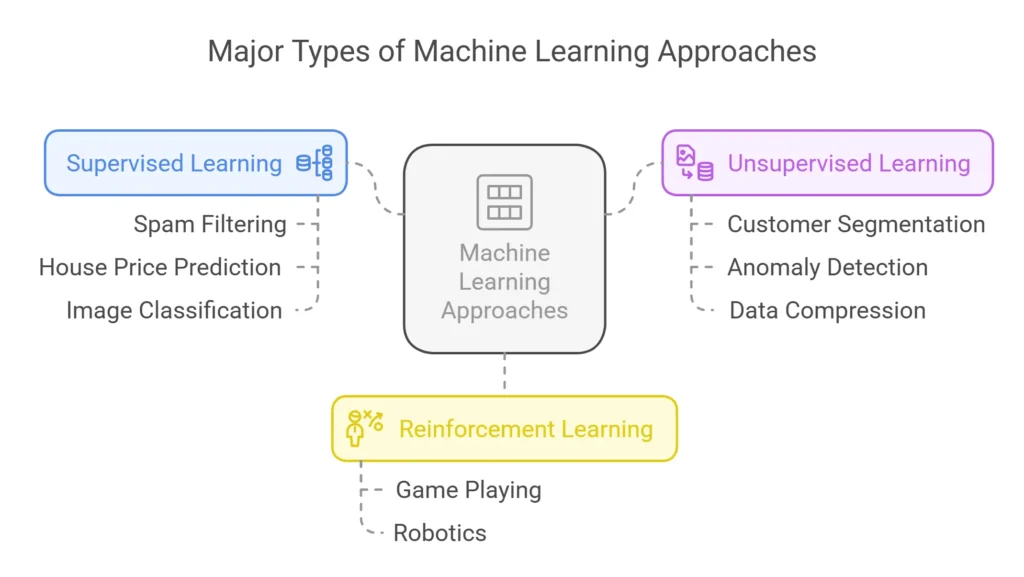

There are a few major types of machine learning approaches, primarily distinguished by how the algorithm learns from the data:

- Supervised Learning: The algorithm learns from labeled examples. Each training example comes with an input and a correct output (label). It’s called supervised because it’s like learning with a teacher providing the answers. Our spam filter example is supervised learning: each email (input) in the training set has a label “spam” or “not spam” (the desired output). The ML model tries to predict the label and is corrected when it’s wrong, so it gradually learns to make the right predictions. Other examples of supervised learning include predicting house prices from historical data (inputs could be house features like size, location, and the label is the price), or classifying images of animals (inputs are the images, labels might be “cat,” “dog,” etc. for each image). Many of the AI systems that have clear goals (like diagnosis from medical tests, or credit scoring from financial data) use supervised learning.

- Unsupervised Learning: The algorithm learns from unlabeled data. Here, we do not provide the correct answers – the program has to find structure in the data on its own. It’s like learning without a teacher. For example, imagine a retail business has transaction data from thousands of customers but no labels or outcomes. An unsupervised learning algorithm (like a clustering algorithm) could analyze the purchase histories and group customers into segments with similar buying habits, without anyone telling it what the “right” groups are. The algorithm might notice, say, one group of customers that buys mostly baby products and another group that buys mostly sports gear – identifying natural clusters in the data. Unsupervised learning is useful for discovery – it can reveal patterns like grouping similar customers, detecting unusual transactions (anomaly detection), or simplifying data complexity (through techniques like compression or dimensionality reduction) without predefined categories. Because there are no given labels or correct outputs, success in unsupervised learning is a bit subjective – it’s about whether the patterns found are meaningful or useful.
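
As a rough sketch of the clustering idea in the retail example, the snippet below groups made-up customers by how much they spend on baby products versus sports gear. It uses a bare-bones version of the k-means algorithm, one common clustering method. The data, the helper names, and the choice to start from the first `k` points are all simplifying assumptions for illustration.

```python
# Made-up customers: (monthly spend on baby products, monthly spend on sports gear).
customers = [
    (90, 5), (80, 10), (95, 0), (85, 15),    # mostly baby products
    (5, 70), (10, 90), (0, 80), (15, 75),    # mostly sports gear
]


def distance_squared(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def k_means(points, k, iterations=10):
    # Simplification: start from the first k points; real implementations
    # usually pick starting centroids at random.
    centroids = [points[i] for i in range(k)]
    for _ in range(iterations):
        # Step 1: assign every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: distance_squared(p, centroids[c]))
            for p in points
        ]
        # Step 2: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids


assignments, centroids = k_means(customers, k=2)
print(assignments)   # one cluster number per customer
print(centroids)     # the "average customer" of each cluster
```

The algorithm is never told which customers belong together. The two groups emerge from the spending numbers alone, and it is still up to a person to decide what each cluster means.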

(There is also reinforcement learning, where an algorithm learns by trial-and-error and gets rewards or penalties – like training a game-playing AI by letting it play and rewarding it for winning moves. Reinforcement learning is powerful (it was central to systems such as AlphaGo and AlphaZero, which reached superhuman play at Go and chess), but it’s a more advanced approach used in specific scenarios like games and robotics. In this beginner series, we’ll focus on the more common supervised and unsupervised learning.)

Key Differences at a Glance: Supervised vs. Unsupervised

- Data: Supervised learning uses labeled data (each example has an input and a known output). Unsupervised learning uses unlabeled data (only inputs, no explicit correct output).

- Objective: Supervised algorithms aim to predict an outcome or label for new data based on learning from examples (e.g., predict spam vs not spam). Unsupervised algorithms aim to find hidden patterns or inherent structures in data (e.g., group customers into clusters) without predefined targets.

- Examples of Results: A supervised learning model might output a classifier or predictor – for example, a model that takes an email and outputs “spam” or “not spam,” or a model that predicts a house’s price. An unsupervised learning result could be groupings of data (clusters of similar customers) or new representations of data (like simplified features from a compression algorithm).

- Evaluation: Supervised models can be evaluated objectively using metrics like accuracy or error rate because we have ground truth labels to compare against (we can check if the model’s prediction was correct). Unsupervised results are harder to evaluate automatically – since there’s no single “right answer,” we often judge them by usefulness (e.g., do the customer segments make business sense?) or use domain knowledge to validate the patterns.

Real-World Applications and Examples

To make these concepts concrete, let’s look at scenarios where supervised and unsupervised learning are each useful:

- Supervised Learning Example – Spam Detection: As discussed, email spam filtering is a classic supervised learning task. The algorithm is trained on a historical dataset of emails where each email is clearly labeled as spam or not. From this, it learns the characteristics of spam emails. When a new email arrives, the model predicts the label (spam or not) based on what it learned. The quality of the model can be measured by how many spam emails it catches (and how few good emails it mistakenly flags). Many real-world problems are like this, where we have past data with outcomes and want to predict future outcomes: for instance, predicting whether a customer will churn (cancel a subscription) based on their usage history, or detecting fraudulent credit card transactions based on past labeled examples of fraud vs. legitimate transactions.

- Unsupervised Learning Example – Customer Segmentation: A marketing team might have a large database of customer records and want to understand distinct groups of customers to target promotions. They might use unsupervised learning (clustering) to automatically discover segments. The algorithm could group customers into, say, “budget-conscious buyers,” “frequent premium shoppers,” and “seasonal shoppers,” based on patterns in their purchase data – all without the team pre-defining these categories. This can guide business strategy (like which group to send a discount to). Another example: unsupervised learning can be used on news articles to group articles by topic, even if those topics aren’t predefined – the algorithm might notice clusters of articles that correspond to politics, sports, entertainment, etc. simply by analyzing word frequencies.
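
The quality measure mentioned in the spam detection example (how much spam is caught, and how many good emails are wrongly flagged) can be computed directly once you have true labels to compare against. The sketch below uses ten made-up emails and made-up predictions. The terms recall and precision are the standard names for these two measures, although the text above does not name them.

```python
# Made-up ground-truth labels and model predictions for ten emails.
true_labels = ["spam"] * 4 + ["not spam"] * 6
predictions = ["spam", "spam", "spam", "not spam",
               "not spam", "not spam", "not spam", "not spam", "not spam", "spam"]

pairs = list(zip(true_labels, predictions))
caught_spam = sum(t == "spam" and p == "spam" for t, p in pairs)        # true positives
missed_spam = sum(t == "spam" and p == "not spam" for t, p in pairs)    # false negatives
false_alarms = sum(t == "not spam" and p == "spam" for t, p in pairs)   # false positives
correct = sum(t == p for t, p in pairs)

accuracy = correct / len(pairs)                           # share of all emails judged correctly
recall = caught_spam / (caught_spam + missed_spam)        # share of spam that was caught
precision = caught_spam / (caught_spam + false_alarms)    # share of spam flags that were right

print(f"accuracy={accuracy:.2f} recall={recall:.2f} precision={precision:.2f}")
```

This kind of check is only possible because the data is labeled. For the customer segmentation example there is no equivalent answer key, which is why those results are judged by usefulness instead.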

When to Use Which?

Choosing between supervised and unsupervised learning depends on your goal and the data you have: If you have a specific target outcome you want to predict and historical data telling you what that outcome is (labels), then supervised learning is the way to go. It will learn the relationship between your input data and the target. On the other hand, if you just have a lot of data and want to explore it, find structure, or summarize it without a particular prediction goal, unsupervised learning is very useful. It can uncover groupings, anomalies, or compress the data to fewer dimensions. Often, unsupervised learning is a first step in understanding data, while supervised learning is used when you have a clear prediction or classification task.

For example, imagine a bank: If the bank wants to predict whether a loan applicant will default on a loan (yes/no outcome), that’s a supervised learning problem (they have past loans labeled as repaid or defaulted to train on). But if the bank wants to simply categorize customers into different risk or behavior profiles without predefined labels, that’s an unsupervised clustering problem. Each approach serves different needs. In practice, data scientists often use both: they might cluster data to gain insights (unsupervised) and then use those insights to inform a supervised model for a specific prediction.

Understanding these two learning paradigms is fundamental before moving on to actually building models. In our upcoming tutorial post, we will apply supervised learning to build our first ML model step-by-step. And remember, if you encounter any unfamiliar terms while exploring machine learning, our AI & Machine Learning Glossary Post is there to help clarify those concepts. You can also read the post “Supervised vs. Unsupervised Learning: Key Differences and Real-World Applications,” where we compare these approaches in detail. After that, we’ll get hands-on with a simple project!