Introduction

When diving into machine learning, one of the first distinctions to understand is supervised learning vs. unsupervised learning. These are two fundamental approaches that differ in how they learn from data. In this post, we’ll explain each approach in beginner-friendly terms, highlight their key differences, and look at real-world applications of both. By understanding supervised and unsupervised learning side by side, you’ll be better equipped to choose the right approach for a given problem and appreciate what kinds of tasks each can tackle.

What is Supervised Learning?

Supervised learning is like learning with an answer key. The algorithm is provided with example inputs and explicit correct outputs (labels) during training. It’s called “supervised” because the training data acts as a teacher – for each example, the algorithm can check if its prediction is right or wrong against the known answer and adjust accordingly.

To illustrate, imagine you’re teaching a child to differentiate between dogs and cats. You show them a picture of an animal (input) and tell them whether it’s a dog or a cat (label). Over time, the child learns characteristics (like dogs tend to be larger or have certain snouts, cats have distinct eyes and ear shapes, etc.) from these labeled examples. Similarly, in supervised machine learning, we might feed a computer a dataset of pet images with each image labeled “dog” or “cat.” The ML model gradually learns to map from the image to the correct label by seeing many examples.

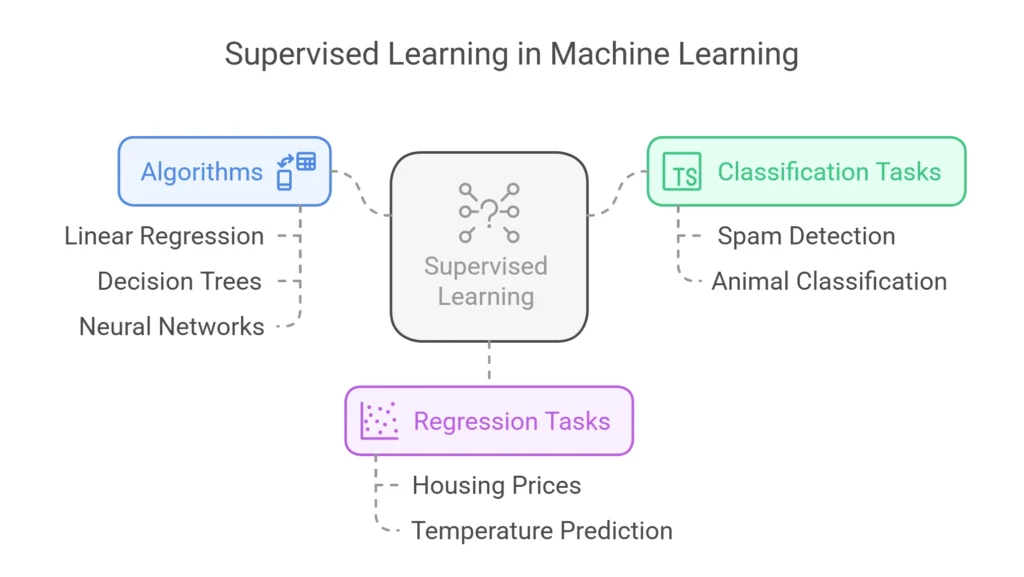

Supervised learning can handle classification tasks (predicting a category, like spam vs. not spam email, or dog vs. cat) and regression tasks (predicting a continuous value, like housing prices or tomorrow’s temperature). Common supervised algorithms include linear regression, logistic regression, decision trees, support vector machines, and neural networks. For example, a linear regression algorithm could learn the relationship between house features and sale price (predicting prices for new houses), while a decision tree might learn to classify loan applicants as low or high risk based on historical loan data. The hallmark of supervised learning is that we have ground truth to learn from – the algorithm’s job is to generalize from those examples so it can make accurate predictions on new, unseen data.
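To make the regression case concrete, here is a minimal sketch of fitting a line to labeled examples using only plain Python. The house sizes and prices are made-up numbers, and `fit_line` and `predict` are illustrative helper names rather than part of any library. In practice you would likely reach for a library such as scikit-learn instead of writing this by hand.

```python
# Toy labeled data (made up for illustration): house size in square
# metres (input) and the known sale price in thousands of dollars (label).
sizes = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 210.0, 270.0, 330.0, 390.0]  # deliberately exactly 3 * size


def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(size, slope, intercept):
    """Apply the learned mapping to a new, unseen input."""
    return slope * size + intercept


# "Training": learn the relationship from the labeled examples.
slope, intercept = fit_line(sizes, prices)

# "Prediction": estimate the price of a house size not in the training data.
predicted_price = predict(100.0, slope, intercept)
print(f"Predicted price for 100 m^2: {predicted_price:.1f}k")
```

The labels (`prices`) are what make this supervised: the fit is chosen to minimize the error between predictions and those known answers.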

What is Unsupervised Learning?

Unsupervised learning is like exploring without a map – the algorithm is given data without any labeled answers and must find structure on its own. There’s no teacher or correct answer guiding the learning. Instead, unsupervised learning algorithms try to discover interesting patterns, groupings, or features in the data.

A classic example of unsupervised learning is clustering. Imagine a marketing team has a large customer dataset with various attributes (age, spending habits, products bought, etc.) but no predefined categories of customers. An unsupervised algorithm (such as k-means clustering) can analyze this data and group customers into clusters that share similar characteristics. The result might be that it finds, say, three distinct groups: (1) young budget-conscious shoppers, (2) middle-aged high-value customers, and (3) older customers who purchase specific product lines. The important thing is that the algorithm formed these groupings itself: we didn’t tell it what patterns to look for or which customers belong together. (With k-means we do have to choose the number of clusters up front; some other methods, such as DBSCAN, infer it from the data.) The clusters come from the structure of the data, and it is then up to people to interpret and name them.
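As a rough illustration of how clustering works, here is a small k-means sketch in plain Python. The customer data (age, monthly spend) is invented, and the helper names and the farthest-point initialization are our own choices for keeping the example deterministic; they are not the only way to do it. Note that `k` is something we pass in.

```python
# Hypothetical customers as (age, monthly spend). No labels are provided.
customers = [
    (22, 40), (25, 35), (24, 45),
    (45, 200), (48, 220), (50, 210),
    (68, 90), (70, 85), (72, 95),
]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point that is
    farthest from all centroids chosen so far (a deterministic choice)."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    return centroids


def k_means(points, k, max_iterations=100):
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so we have converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                centroids[i] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


labels, centroids = k_means(customers, k=3)
print(labels)  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
```

The cluster numbers carry no meaning on their own; deciding that cluster 0 looks like "young budget-conscious shoppers" is a human interpretation. In real use you would also typically scale the features first, since k-means is sensitive to the units of each column.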

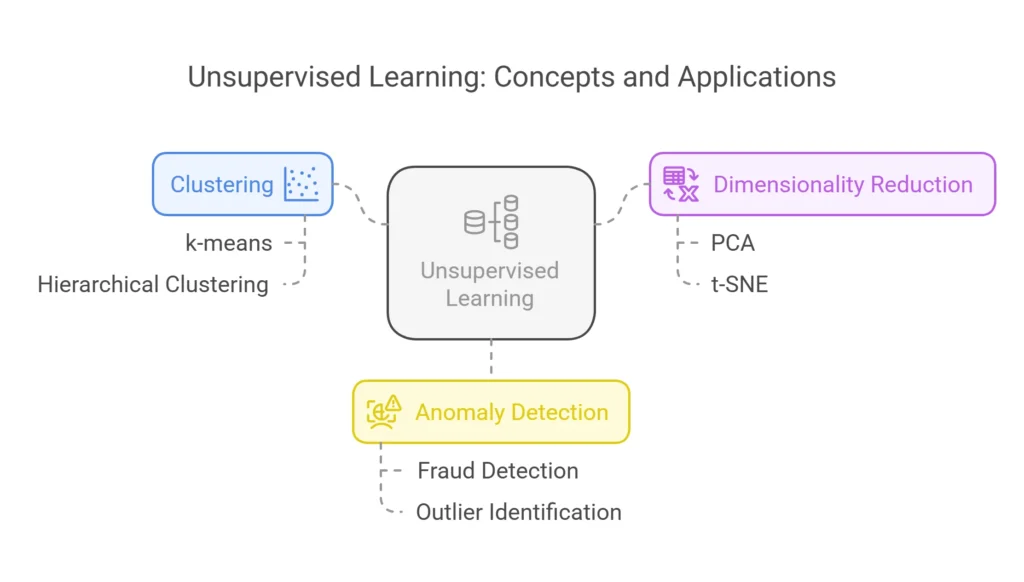

Another use of unsupervised learning is dimensionality reduction (like PCA – Principal Component Analysis). This is used to simplify complex datasets by finding new, simpler representations of the data that capture the most important information. For instance, PCA can reduce the number of features in a dataset while retaining most of its variance. Unsupervised learning is also used for anomaly detection – identifying outliers in data (like fraudulent transactions that look very different from normal transactions) without being explicitly told what counts as “fraud” versus “legit” in advance.
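The anomaly detection idea can be sketched with a simple statistical rule. The example below flags values that sit far from the median, measured in units of the median absolute deviation (a "modified z-score"). The transaction amounts are invented, `find_outliers` is an illustrative name, and the cutoff of 3.5 is a conventional default that you should treat as an assumption to tune. Real fraud systems are far more sophisticated than this.

```python
from statistics import median

# Hypothetical transaction amounts; no transaction is labeled as fraud.
amounts = [20, 22, 19, 21, 23, 20, 950, 18, 22, 21]


def find_outliers(values, threshold=3.5):
    """Flag values whose modified z-score exceeds the threshold.

    The median and the median absolute deviation (MAD) are used because
    they are distorted less by the outliers themselves than the mean and
    standard deviation would be.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        return []  # no spread to measure against, so flag nothing
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores.
    return [v for v in values if 0.6745 * abs(v - center) / mad > threshold]


outliers = find_outliers(amounts)
print(outliers)  # [950]
```

Nothing told the function what a bad transaction looks like; it only learned what "typical" looks like from the data and flagged what did not fit.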

Common unsupervised algorithms include clustering methods (k-means, hierarchical clustering, DBSCAN) and dimensionality reduction techniques (PCA, t-SNE). The results of unsupervised learning are harder to judge as “right” or “wrong” because there is no single correct answer. Instead, we evaluate these results by how useful or sensible they are. In our example, the marketing team would evaluate whether the discovered customer segments are meaningful for their business (e.g., can they tailor marketing strategies to these groups?).

Key Differences Between Supervised and Unsupervised Learning

It’s clear that supervised and unsupervised learning serve different purposes. Here’s a quick rundown of their key differences:

- Labeled vs. Unlabeled Data: Supervised learning uses labeled data – for every input, the desired output is provided in the training set. Unsupervised learning uses unlabeled data – it only has inputs and must find structure without any given outputs.

- Goal: Supervised learning’s goal is to learn a mapping from inputs to a known output (so you can predict the outputs on new inputs). Unsupervised learning’s goal is to find hidden patterns or groupings in the data (with no specific output to predict).

- Examples of Output: A supervised learning model produces predictions or classifications (e.g., “predict price = $250K” or “label = Spam”). An unsupervised learning model produces groupings (e.g., clusters of similar customers) or new feature representations (e.g., compressed dimensions), rather than a straightforward prediction.

- Feedback and Evaluation: In supervised learning, the model can be directly evaluated during training by comparing its predictions to the known labels (using metrics like accuracy for classification or mean squared error for regression). The learning is guided by this feedback loop of errors. In unsupervised learning, there’s no direct error feedback since no labels are available – evaluation is more about validation via domain knowledge or indirect metrics (like cluster cohesion or silhouette score for clustering). The learning is more exploratory.
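The evaluation metrics mentioned above are simple enough to write out by hand. The sketch below computes accuracy and mean squared error (both need true labels) and a silhouette score for one-dimensional points (which needs only the data and the cluster assignments). All numbers are toy values, and the silhouette helper assumes at least two clusters.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the known labels."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def mean_squared_error(true_values, predicted_values):
    """Average squared gap between predictions and known values."""
    pairs = list(zip(true_values, predicted_values))
    return sum((t - p) ** 2 for t, p in pairs) / len(pairs)


def silhouette_score(values, labels):
    """Mean silhouette over 1-D points. Assumes at least two clusters."""
    scores = []
    for i, (x, own) in enumerate(zip(values, labels)):
        same = [
            abs(x - y)
            for j, (y, label) in enumerate(zip(values, labels))
            if label == own and j != i
        ]
        if not same:
            scores.append(0.0)  # convention for single-member clusters
            continue
        a = sum(same) / len(same)  # cohesion: mean distance within own cluster
        b = min(  # separation: mean distance to the nearest other cluster
            sum(abs(x - y) for y, label in zip(values, labels) if label == other)
            / labels.count(other)
            for other in set(labels)
            if other != own
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Supervised: compare predictions against known answers.
acc = accuracy(["spam", "ham", "spam", "ham"], ["spam", "ham", "ham", "ham"])
mse = mean_squared_error([250.0, 300.0], [240.0, 310.0])

# Unsupervised: no answers to compare against, only the data and its grouping.
sil = silhouette_score([1.0, 2.0, 10.0, 11.0], [0, 0, 1, 1])

print(acc, mse, round(sil, 3))
```

A silhouette score close to 1 suggests tight, well-separated clusters, but it cannot say whether those clusters are useful for your problem. That judgment still depends on domain knowledge.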

Real-World Applications

Most practical AI applications use one (or both) of these learning types:

- Supervised Learning Applications: This approach shines whenever you have historical data with outcomes and want to predict future outcomes. For example, in healthcare, supervised learning is used to predict patient outcomes (like disease diagnosis or readmission risk) from medical records, because we have data on past patients with known outcomes to train on. In finance, it’s used for credit scoring – given past loan applicants with labels “repaid” or “defaulted,” a model learns to predict default risk for new applicants. Even everyday tech like handwriting recognition on your phone or email sorting (priority inbox) uses supervised learning, trained on labeled examples of characters or past user behaviors. The common thread: there’s a clear target variable to learn.

- Unsupervised Learning Applications: This approach is used when you need to make sense of a lot of data without explicit instructions on what to look for. In marketing, as mentioned, customer segmentation for personalized outreach is a key example. In biology, unsupervised learning helps in gene expression analysis – clustering genes with similar expression patterns to hypothesize their related functions. Recommendation systems sometimes use unsupervised techniques (like clustering or matrix factorization) to find latent factors in user preferences. Anomaly detection in cybersecurity (identifying unusual network activity that could indicate a cyber attack) often starts unsupervised, since you might not have labels for “attack” vs “normal” for all scenarios; the system flags data points that don’t fit any learned pattern. Essentially, unsupervised learning is great for exploring data, compressing data, and finding signals that might not be immediately obvious.

Choosing the Right Approach

Often, the decision between supervised and unsupervised learning comes down to the question: “Do I have labeled outcome data for what I’m trying to achieve?” If yes, you’ll likely formulate the problem as supervised learning. If no, you’ll look to unsupervised techniques to gain insights. It’s also not uncommon to use them in tandem. For example, you might use unsupervised learning to discover categories or features in data, and then use those as inputs to a supervised learning model for a specific prediction task.

To ground this in a scenario, consider an e-commerce platform. It might first apply unsupervised learning to cluster products or customers into categories based on browsing patterns. Then, to improve sales, it could use supervised learning to predict which customers are likely to respond to a particular promotion (using a labeled dataset of past campaign responses). By combining both approaches, the platform gets the best of discovery and prediction.
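Here is one very simplified way that two-stage idea could look in code. The data, the single "sessions per week" feature, and all function names are hypothetical. The supervised step is deliberately minimal: it just learns the historical response rate for each discovered segment.

```python
# Hypothetical data: weekly browsing sessions per customer, plus whether
# each customer responded to a past campaign (the labeled outcome).
sessions = [1, 2, 2, 3, 9, 10, 11, 12]
responded = [0, 0, 1, 0, 1, 1, 0, 1]


def nearest(value, centroids):
    """Index of the centroid closest to the value."""
    return min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))


def two_means_1d(values, max_iterations=100):
    """Unsupervised step: split 1-D values into two segments (k-means, k=2)."""
    centroids = [float(min(values)), float(max(values))]
    for _ in range(max_iterations):
        labels = [nearest(v, centroids) for v in values]
        new_centroids = []
        for i in range(2):
            members = [v for v, label in zip(values, labels) if label == i]
            new_centroids.append(sum(members) / len(members) if members else centroids[i])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids


def fit_response_rates(values, outcomes, centroids):
    """Supervised step: learn the response rate per segment from labels."""
    totals, hits = {}, {}
    for value, outcome in zip(values, outcomes):
        segment = nearest(value, centroids)
        totals[segment] = totals.get(segment, 0) + 1
        hits[segment] = hits.get(segment, 0) + outcome
    return {segment: hits[segment] / totals[segment] for segment in totals}


centroids = two_means_1d(sessions)  # discovery
rates = fit_response_rates(sessions, responded, centroids)  # prediction model

# Score a new customer who browses 8 times a week.
new_customer_rate = rates[nearest(8, centroids)]
print(centroids, rates, new_customer_rate)
```

The first stage never sees `responded`; it only groups customers by behavior. The second stage uses those groups as its input feature and the labels as its target, which is the hand-off described above.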

Understanding these paradigms is fundamental in machine learning. In another post, we’ll implement a simple supervised learning model step by step (see the “Build Your First Machine Learning Model in Python” post). We’ll also continue to mention whether a technique is supervised or unsupervised as we introduce new concepts. And if you encounter any unfamiliar terms along the way, remember you can always refer to our Glossary for quick definitions.