Introduction

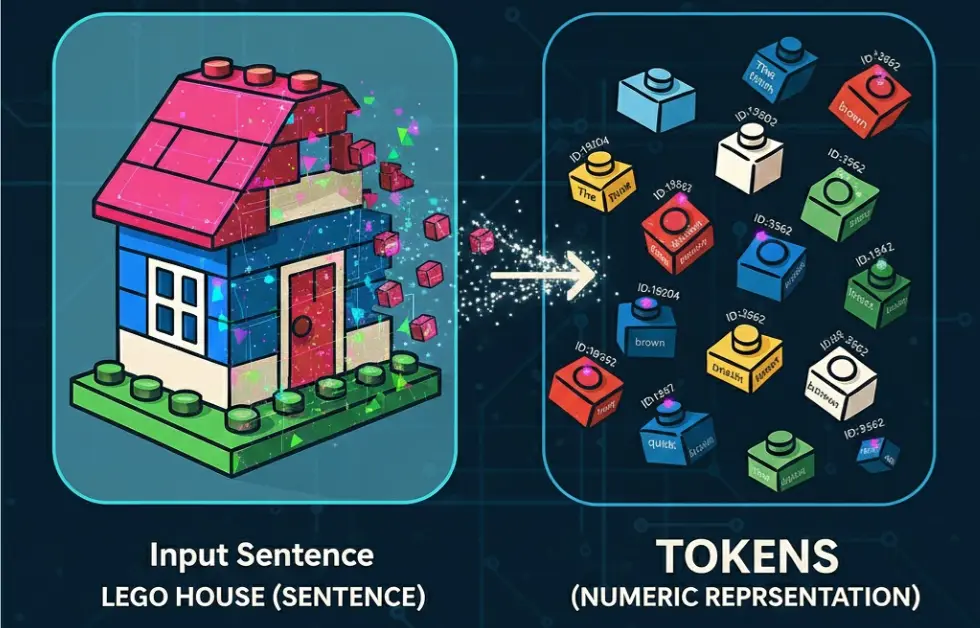

When we interact with an AI model, it feels like the system understands our words instantly, just like a human would. But that’s not what happens internally. AI models cannot read text directly. They cannot interpret letters, punctuation, or full sentences the way we do. Instead, they rely entirely on numbers. Tokenization is the crucial first step that transforms your text into a form the model can understand.

To simplify this idea, imagine a sentence as a completed LEGO house. If you want someone else to study how the house is built, you wouldn’t hand them the final model in one piece. You would dismantle it into individual LEGO bricks so they can see the structure and rebuild it themselves. Tokenization works exactly the same way. Instead of giving the AI your entire sentence as one unit, you break it down into small pieces called tokens. Each token becomes one LEGO brick the model can process.

Concept 1: What Are Tokens?

A token is a small unit of text. But what counts as “small” can vary. There are several levels of tokenization, and each model chooses one depending on what it was trained for.

✅ Word-Level Tokenization

This is the simplest form. Each whole word becomes one token.

Example:

“deep learning” → [“deep”, “learning”]

This feels intuitive, but it has a problem: a word-level model cannot handle words it has never seen before. If the vocabulary doesn’t contain the exact word “electrification”, the tokenizer typically maps it to a generic “unknown” placeholder, and the word’s meaning is lost.
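To make the unknown-word problem concrete, here is a minimal sketch of a word-level tokenizer. The tiny vocabulary, the `word_tokenize` helper, and the `[UNK]` placeholder name are all assumptions made for illustration, not part of any specific library.

```python
# Toy word-level tokenizer. VOCAB and the "[UNK]" placeholder are illustrative assumptions.
VOCAB = {"deep", "learning", "is", "fun"}
UNK = "[UNK]"

def word_tokenize(text, vocab=VOCAB):
    """Split on whitespace and replace out-of-vocabulary words with UNK."""
    return [word if word in vocab else UNK for word in text.lower().split()]

print(word_tokenize("deep learning"))           # ['deep', 'learning']
print(word_tokenize("electrification is fun"))  # ['[UNK]', 'is', 'fun']
```

Every word outside the vocabulary collapses into the same placeholder, so the model cannot tell one unseen word from another.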

✅ Subword-Level Tokenization (Most Common Today)

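Modern models like GPT and BERT keep common words whole and break rarer words into smaller pieces that occur frequently in their training text.

Example:

“tokenization” → [“token”, “##ization”]

The “##” prefix is BERT’s way of marking a piece that continues the current word.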

This solves the “new words” problem because even unfamiliar long words contain familiar sub-pieces.
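One way to picture this is a greedy "longest match first" split, in the spirit of WordPiece. The sketch below assumes a tiny hand-written vocabulary and a hypothetical `subword_tokenize` helper; real tokenizers learn their vocabulary from data and handle many more edge cases.

```python
# Toy subword vocabulary (hypothetical). "##" marks a piece that continues a word.
VOCAB = {"token", "##ization", "electr", "##ification", "play", "##ing"}

def subword_tokenize(word, vocab=VOCAB, unk="[UNK]"):
    """Greedy longest-match-first split of a single word into known pieces."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no known piece fits at this position
        pieces.append(match)
        start = end
    return pieces

print(subword_tokenize("tokenization"))     # ['token', '##ization']
print(subword_tokenize("electrification"))  # ['electr', '##ification']
```

Even though “electrification” is not in the vocabulary as a whole word, it can still be represented with pieces the model already knows.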

✅ Character-Level Tokenization

Here, every single character becomes a token.

Example:

“AI” → [“A”, “I”]

“learning” → [“l”, “e”, “a”, “r”, “n”, “i”, “n”, “g”]

Why would anyone tokenize at this level?

- It gives maximum flexibility

- The model can handle any text (including typos or unknown symbols)

But character-level tokenization makes sequences much longer because a single word becomes many tokens, which increases processing time and memory usage.
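A quick way to see the length difference is to count tokens for the same text at both levels. This is only a sketch using plain Python string operations; the sample sentence is made up for illustration.

```python
sentence = "learning is fun"

word_tokens = sentence.split()  # word-level: split on spaces
char_tokens = list(sentence)    # character-level: every character, spaces included

print(len(word_tokens))  # 3
print(len(char_tokens))  # 15
```

The same short sentence is five times longer at the character level, and the gap grows with longer words.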

Now you see how each approach has trade-offs. Modern language models typically use subword tokenization because it finds the right balance between flexibility, meaning, and efficiency.

Concept 2: Vocabulary — The AI’s Dictionary

Once tokens are defined, the AI model builds a vocabulary, its dictionary of all the tokens it can recognize.

If the vocabulary contains 30,000 tokens, it means:

- Every token the model understands is one of these 30,000

- Each token has a unique ID

- Every text input must be broken into these tokens

Example vocabulary entries:

"play" → 4721

"##ing" → 3012

"AI" → 8275

"." → 1012

This list is fixed when the tokenizer is trained and does not change afterward. If a word doesn’t exist in the vocabulary, the tokenizer breaks it into known sub-parts.
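In code, a vocabulary behaves like a two-way lookup table. The sketch below reuses the four example entries above; the variable names are our own, and a real vocabulary would hold tens of thousands of entries.

```python
# The four entries come from the example above; a real vocabulary is far larger.
vocab = {"play": 4721, "##ing": 3012, "AI": 8275, ".": 1012}

# Reverse mapping, used to turn IDs back into tokens.
id_to_token = {token_id: token for token, token_id in vocab.items()}

tokens = ["play", "##ing"]
ids = [vocab[t] for t in tokens]

print(ids)                            # [4721, 3012]
print([id_to_token[i] for i in ids])  # ['play', '##ing']
```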

Concept 3: Token IDs — What the Model Actually Sees

Tokenization doesn’t stop at splitting text. Each token is converted into a number called a token ID.

Example:

Tokens: ["token", "##ization", "is", "essential"]

Token IDs: [19204, 3989, 2003, 6827]

These numeric IDs are the true input. The AI never sees words; it sees sequences of numbers.

Inside the model, these IDs are transformed into vectors (embeddings), but that is a topic for another article.

Concept 4: Sequence Length — How Much the Model Can “Read” at Once

Sequence length represents how many tokens are in your input.

This matters because every model has a token limit.

- Older models such as BERT: 512 tokens

- GPT-3: 2048 tokens

- GPT-3.5: 4096 tokens

- LLaMA 3: 8k tokens (128k for LLaMA 3.1)

- GPT-4 Turbo: up to 128k tokens

If your text exceeds the limit, the tokenizer or API in front of the model will typically do one of the following:

- Cut the beginning

- Cut the end

- Or refuse the input altogether

This affects summarization, conversation memory, long documents, and cost. Everything in LLM workflows is tied to token count.
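The three overflow behaviors listed above can be sketched as a small helper. The function name `fit_to_limit` and its `strategy` values are hypothetical; real libraries expose their own options for this.

```python
def fit_to_limit(token_ids, max_tokens, strategy="keep_start"):
    """Apply one overflow policy to a list of token IDs (illustrative sketch)."""
    if len(token_ids) <= max_tokens:
        return token_ids
    if strategy == "keep_start":  # cut the end
        return token_ids[:max_tokens]
    if strategy == "keep_end":    # cut the beginning
        return token_ids[len(token_ids) - max_tokens:]
    # Any other strategy (for example "reject"): refuse the input altogether.
    raise ValueError(f"input has {len(token_ids)} tokens, limit is {max_tokens}")

ids = list(range(10))                     # stand-in for 10 token IDs
print(fit_to_limit(ids, 4))               # [0, 1, 2, 3]
print(fit_to_limit(ids, 4, "keep_end"))   # [6, 7, 8, 9]
```

Which policy is right depends on the task: a chat history usually keeps the most recent tokens, while a document classifier often keeps the beginning.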

Concept 5: Why Tokenization Matters

Tokenization affects almost every part of AI:

- Understanding: Well-chosen units, such as subwords, help the model interpret meaning more accurately.

- Context: The number of tokens determines how much the model can “remember” at once.

- Speed: Fewer tokens → faster responses.

- Cost: Paid APIs charge per token.

- Accuracy: Clean tokens reduce ambiguity and errors.

Without tokenization, language models simply would not function.
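Because cost scales with token count, a rough estimate is simple arithmetic. In the sketch below, the `estimate_cost` helper and the price of 0.002 per 1,000 tokens are made-up assumptions; check your provider's pricing page for real numbers.

```python
def estimate_cost(num_tokens, price_per_1k_tokens):
    """Rough cost estimate: tokens are billed in units of 1,000."""
    return num_tokens / 1000 * price_per_1k_tokens

# Hypothetical price, for illustration only.
cost = estimate_cost(num_tokens=2500, price_per_1k_tokens=0.002)
print(f"${cost:.4f}")  # $0.0050
```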

Tokenization in Python (Practical Example)

Install the Transformers library:

pip install transformers

Tokenizing with BERT:

from transformers import BertTokenizer

tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")

sentence = "Tokenization is essential for understanding AI."

tokens = tokenizer.tokenize(sentence)

print("Tokens:", tokens)

# encode() also adds BERT's special [CLS] and [SEP] tokens around the sentence
token_ids = tokenizer.encode(sentence)

print("Token IDs:", token_ids)

Running the BERT tokenizer on the sentence:

“Tokenization is essential for understanding AI.”

produces the following tokens:

Tokens: ['token', '##ization', 'is', 'essential', 'for', 'understanding', 'ai', '.']

Token IDs: [101, 19204, 3989, 2003, 6827, 2005, 4824, 9932, 1012, 102]

💡 Small Note on Token Count: Although the sentence breaks down into 8 content tokens, the final list of Token IDs has 10 numbers (101 at the start and 102 at the end). These extra IDs represent the special tokens [CLS] (Classification) and [SEP] (Separator), which the model needs to understand the sequence structure.
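The arithmetic behind the two counts can be checked in plain Python. This sketch simply reuses the IDs printed above and wraps the content tokens by hand; the variable names are our own.

```python
CLS_ID, SEP_ID = 101, 102  # IDs of [CLS] and [SEP] as they appear in the output above

# The 8 content tokens of the sentence.
content_ids = [19204, 3989, 2003, 6827, 2005, 4824, 9932, 1012]

# What the model receives: [CLS] + content + [SEP].
model_input = [CLS_ID] + content_ids + [SEP_ID]

print(len(content_ids))  # 8
print(len(model_input))  # 10
```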

BERT splits the word “Tokenization” into two subwords, “token” and “##ization”, because BERT uses a WordPiece tokenizer. It also lowercases the text, since this is the uncased version of the model.

In total, this sentence becomes 8 tokens (10 including the special tokens), which the tokenizer then converts into numeric token IDs that the model can process internally.

💡 Note: BPE (The GPT/LLaMA Method)

While our BERT example uses WordPiece (a style of subword tokenization), many powerful modern LLMs (such as the GPT family and LLaMA) rely on Byte Pair Encoding (BPE).

BPE is a simple, iterative process that builds a vocabulary by repeatedly merging the most frequent adjacent pair of characters or subwords into a single new token. The result balances efficiency (common words become single tokens) with flexibility (rare words are broken down into known chunks).
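The merge loop is short enough to sketch in plain Python. This is a simplified, character-based illustration with a hypothetical `train_bpe` helper and a toy word list; production tokenizers work on bytes, use much larger corpora, and add many optimizations.

```python
from collections import Counter

def train_bpe(words, num_merges):
    """Learn merge rules from words; returns the merges and the segmented words."""
    # Start with every word as a tuple of single characters, with its frequency.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count every adjacent pair of symbols, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties resolve to the first pair seen
        merges.append(best)
        # Rewrite each word, replacing the best pair with one merged symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges, list(corpus)

merges, segmented = train_bpe(["low", "low", "lower", "lowest"], num_merges=2)
print(merges)     # [('l', 'o'), ('lo', 'w')]
print(segmented)  # [('low',), ('low', 'e', 'r'), ('low', 'e', 's', 't')]
```

After just two merges, the frequent word “low” is a single token, while the rarer “lower” and “lowest” are still built from “low” plus smaller pieces.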

For a more detailed comparison of BPE, WordPiece, and other methods, see the Hugging Face Summary of Tokenizers.

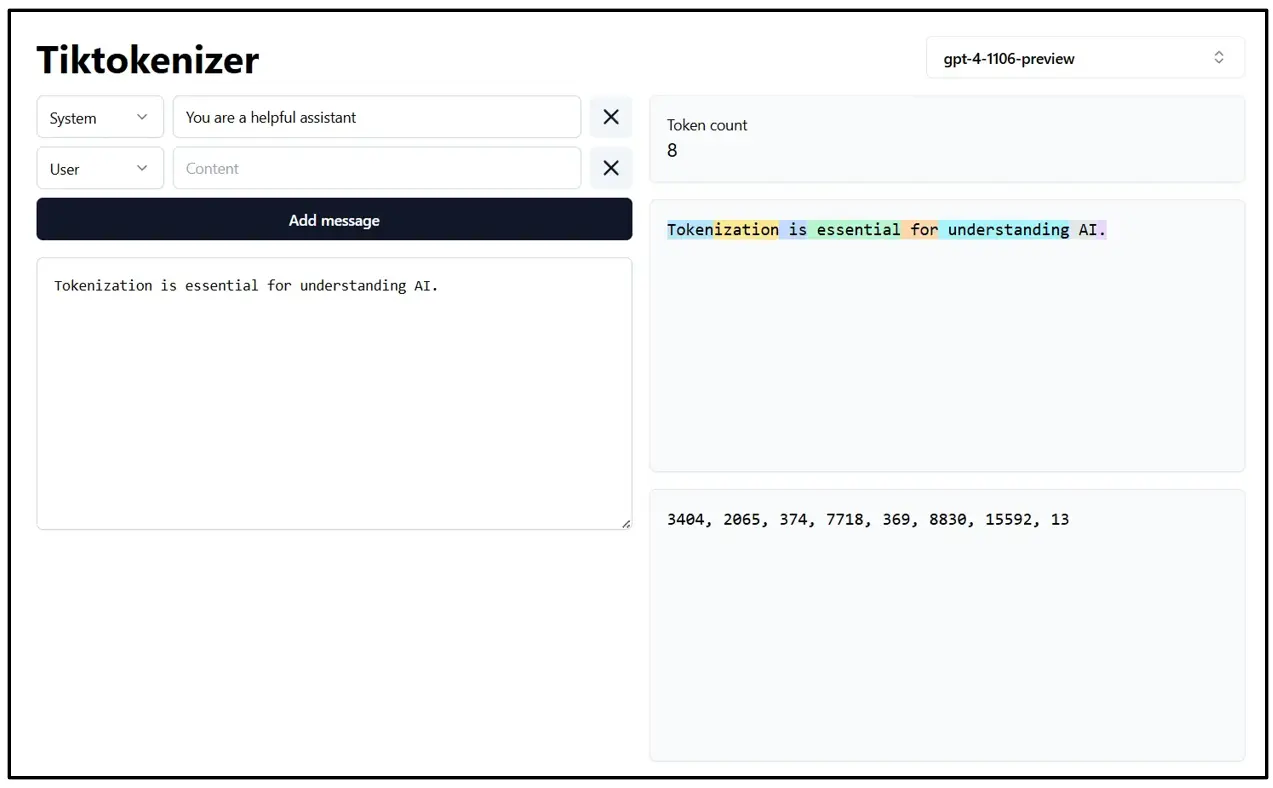

If you want to experiment with how other models tokenize the same sentence, you can use this interactive online tokenizer tool:

👉 https://tiktokenizer.vercel.app/

It lets you test how the text is tokenized across different models such as GPT-2, GPT-3.5, GPT-4o, LLaMA, Mistral, and more.

Just paste your sentence and select a model to instantly see:

- how many tokens it generates

- how each model splits words differently

- the numeric token IDs used internally

This is a great way to visually compare tokenization methods and understand why different models produce different token counts.

Frequently Asked Questions

What is meant by tokenization?

Tokenization is the process of breaking text into smaller units called tokens. These tokens can be words, subwords, or characters. AI models use tokenization to convert text into numerical form so they can understand and process language.

What is a simple example of tokenization?

A simple example is splitting the sentence:

“I love AI.”

into the tokens:

[“I”, “love”, “AI”, “.”]

This helps the model understand each part separately.

What is tokenization in LLMs?

In Large Language Models (LLMs), tokenization means breaking text into tokens that the model can convert into numeric IDs. These IDs are then transformed into embeddings, allowing the model to “understand” and generate text. Different LLMs use different tokenizers, so the number of tokens may vary for the same sentence.

Conclusion

Tokenization is the quiet but essential step that allows AI models to read and process text. It breaks language into smaller pieces, assigns them IDs, and prepares everything for deeper processing such as embeddings and attention. Once you understand tokens, vocabularies, token IDs, and sequence limits, you have the foundation needed to understand almost every topic in NLP.

If you want to explore more core AI concepts, visit the AI & ML Glossary for clear definitions of related terms.

In future posts, we’ll explore embeddings, vocabulary size, positional encoding, and how tokenization impacts cost and model performance.
